2016 Media Shipments

| Medium | Exabytes | Revenue | $/GB |
|---|---|---|---|
| Flash | 120 | $38.7B | $0.320 |
| Hard Disk | 693 | $26.8B | $0.039 |
| LTO Tape | 40 | $0.65B | $0.016 |

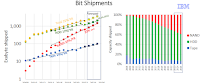

Six years ago I wrote Archival Media: Not a Good Business and included this table. The argument went as follows:

- The value that can be extracted from data decays rapidly with time.

- Thus companies would rather invest in current than archival data.

- Thus archival media and systems are a niche market.

- Thus archival media and systems lack the manufacturing volume to drive down prices.

- Thus although quasi-immortal media have low opex (running cost), they have high capex (purchase cost).

- Especially now interest rates are non-zero, the high capex makes the net present value of their lifetime cost high.

- Archival media compete with legacy generic media, which have mass-market volumes and have already amortized their R&D, so have low capex but higher opex through their shorter service lives.

- Because they have much higher volumes and thus much more R&D, generic media have much higher Kryder rates, meaning that although they need to be replaced over time, each new unit at approximately equal cost replaces several old units, reducing opex.

- Especially now interest rates are non-zero, the net present value of the lower capex but higher opex is likely very competitive.
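The net-present-value comparison in the list above can be illustrated with a toy calculation. This is a minimal sketch, not a model from the original post: every figure (purchase prices, running costs, service life, Kryder rate, discount rate) is a hypothetical assumption chosen only to show the mechanics, and the function names are illustrative.

```python
def npv(cash_flows, rate):
    """Net present value of yearly costs; cash_flows[t] is paid at the start of year t."""
    return sum(cost / (1 + rate) ** t for t, cost in enumerate(cash_flows))

def archival_costs(years, capex, opex):
    """Quasi-immortal medium: bought once, then a small running cost each year."""
    flows = [opex] * years
    flows[0] += capex
    return flows

def generic_costs(years, capex, opex, service_life, kryder_rate):
    """Generic medium: replaced every service_life years; each replacement is
    cheaper because the price per byte falls by kryder_rate per year."""
    flows = [opex] * years
    for t in range(0, years, service_life):
        flows[t] += capex * (1 - kryder_rate) ** t
    return flows

# All figures below are hypothetical, per unit of data stored.
YEARS, DISCOUNT = 30, 0.04
archival = npv(archival_costs(YEARS, capex=100.0, opex=0.5), DISCOUNT)
generic = npv(generic_costs(YEARS, capex=20.0, opex=2.0,
                            service_life=5, kryder_rate=0.15), DISCOUNT)
print(f"archival NPV of cost: {archival:.1f}")
print(f"generic NPV of cost:  {generic:.1f}")
```

With these made-up inputs the generic medium has the lower lifetime cost. Different assumptions, in particular a Kryder rate near zero, can reverse the result.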

Below the fold I look into why, despite this, Microsoft has been pouring money into archival system R&D for about a decade.

Background

Eleven years ago Facebook announced they were building entire data centers for cold storage. They expected the major reason for reads accessing this data would be subpoenas. Eighteen months later I was finally able to report on Kestutis Patiejunas' talk explaining the technology and why it made sense in More on Facebook's "Cold Storage". They used two different technologies, the first exploiting legacy generic media in the form of mostly-powered-down hard drives. Facebook's design for this was:

aimed at limiting the worst-case power draw. It exploits the fact that this storage is at the bottom of the storage hierarchy and can tolerate significant access latency. Disks are assigned to groups in equal numbers. One group of disks is spun up at a time in rotation, so the worst-case access latency is the time needed to cycle through all the disk groups. But the worst-case power draw is only that for a single group of disks and enough compute to handle a single group.

Why is this important? Because of the synergistic effects knowing the maximum power draw enables. The power supplies can be much smaller, and because the access time is not critical, need not be duplicated. Because Facebook builds entire data centers for cold storage, the data center needs much less power and cooling. It can be more like cheap warehouse space than expensive data center space. Aggregating these synergistic cost savings at data center scale leads to really significant savings.
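The trade-off in the quoted design, bounded power in exchange for longer worst-case latency, can be sketched numerically. The disk count, per-disk power and time each group stays spun up are hypothetical figures, not Facebook's, and the sketch ignores the power drawn by compute.

```python
def rotation_tradeoff(total_disks, groups, watts_per_disk, minutes_per_group):
    """Return (worst-case power in watts, worst-case access latency in minutes)
    when equal-sized disk groups are spun up one at a time in rotation."""
    if total_disks % groups:
        raise ValueError("disks must divide evenly into groups")
    disks_per_group = total_disks // groups
    worst_power = disks_per_group * watts_per_disk   # only one group spins at a time
    worst_latency = groups * minutes_per_group       # wait for a full cycle of groups
    return worst_power, worst_latency

# Hypothetical figures for illustration only.
for groups in (1, 4, 16):
    power, latency = rotation_tradeoff(480, groups, watts_per_disk=8,
                                       minutes_per_group=30)
    print(f"{groups:2d} groups: {power:5d} W peak, up to {latency} min latency")
```

More groups means a smaller power envelope to provision for, at the price of a longer wait for the least lucky read.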

Patiejunas figured out that, only at cloud scale, the economics of archival storage could be made to work by reducing the non-media, non-system costs. This insight led to the second technology, robots full of long-lived optical media:

It has 12 Blu-ray drives for an entire rack of cartridges holding 10,000 100GB Blu-ray disks managed by a robot. When the robot loads a group of 12 fresh Blu-ray disks into the drives, the appropriate amount of data to fill them is read from the currently active hard disk group and written to them. This scheduling of the writes allows for effective use of the limited write capacity of the Blu-ray drives. If the data are ever read, a specific group has to be loaded into the drives, interrupting the flow of writes, but this is a rare occurrence. Once all 10,000 disks in a rack have been written, the disks will be loaded for reads infrequently. Most of the time the entire Petabyte rack will sit there idle.

In theory Blu-ray disks have a 50-year life, but this is irrelevant:

No-one expects the racks to sit in the data center for 50 years, at some point before then they will be obsoleted by some unknown new, much denser and more power-efficient cold storage medium

Eight years ago in The Future Of Storage I explained:

Every few months there is another press release announcing that some new, quasi-immortal medium such as 5D quartz or stone DVDs has solved the problem of long-term storage. But the problem stays resolutely unsolved. Why is this? Very long-lived media are inherently more expensive, and are a niche market, so they lack economies of scale. Seagate could easily make disks with archival life, but they did a study of the market for them, and discovered that no-one would pay the relatively small additional cost. The drives currently marketed for "archival" use have a shorter warranty and a shorter MTBF than the enterprise drives, so they're not expected to have long service lives.

The fundamental problem is that long-lived media only make sense at very low Kryder rates. Even if the rate is only 10%/yr, after 10 years you could store the same data in 1/3 the space. Since space in the data center racks or even at Iron Mountain isn't free, this is a powerful incentive to move old media out. If you believe that Kryder rates will get back to 30%/yr, after a decade you could store 30 times as much data in the same space.
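The two figures in the quote are consistent with reading the Kryder rate as the annual fractional decrease in the space (or cost) needed per byte. That reading is an assumption made for this sketch, as is the function name:

```python
def space_fraction(kryder_rate, years):
    """Fraction of the original space needed for the same data after `years`,
    assuming the space per byte shrinks by kryder_rate each year."""
    return (1 - kryder_rate) ** years

for rate in (0.10, 0.30):
    frac = space_fraction(rate, 10)
    print(f"{rate:.0%}/yr: {frac:.3f} of the space, "
          f"or {1 / frac:.1f}x the data in the same space")
```

At 10%/yr the fraction after a decade is about 0.35, roughly the quoted 1/3. At 30%/yr it is about 0.028, which is in line with the quoted 30 times as much data in the same space.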

The key parameter of these archival storage systems isn't the media life, it is the write bandwidth needed to keep up with the massive flow of data to be archived:

While a group of disks is spun up, any reads queued up for that group are performed. But almost all the I/O operations to this design are writes. Writes are erasure-coded, and the shards all written to different disks in the same group. In this way, while a group is spun up, all disks in the group are writing simultaneously providing huge write bandwidth. When the group is spun down, the disks in the next group take over, and the high write bandwidth is only briefly interrupted.

The lesson Facebook taught a decade ago was that the keys to cost-effective archival storage were first, massive scale, and second, high write bandwidth. Microsoft has learned the lesson and has been working to develop cloud-scale, high write bandwidth systems using two quasi-immortal media, DNA and silica.

DNA

I have been tracking the development of DNA as a storage medium since 2012, when I wrote Forcing Frequent Failures based on a Harvard team writing 700KB. Much of the recent progress has been driven by the collaboration between Microsoft Research and the University of Washington. In 2019 they published the first automated write-to-read prototype system (pictured), which was slow:

This single-channel device, which occupied a tabletop, had a throughput of 5 bytes over approximately 21 hours, with all but 40 minutes of that time consumed in writing “HELLO” into the DNA.

Rob Carlson's The Quest for a DNA Data Drive provides a useful overview of the current state of the art. Alas he starts by using one of my pet hates, the graph showing an immense gap between the "requirements" for data storage and the production of storage media. Carlson captions the graph:

Prior projections for data storage requirements estimated a global need for about 12 million petabytes of capacity by 2030. The research firm Gartner recently issued new projections, raising that estimate by 20 million petabytes. The world is not on track to produce enough of today’s storage technologies to fill that gap.

<rant> Carlson's point is to suggest that there is a huge market for DNA storage. But this ignores economics. There will always be a "requirement" to store more data than the production of storage media, because some data is not valuable enough to justify the cost of storing it. The "gap" could only be filled if media were free. Customers will buy the storage systems they can afford and prioritize the data in them according to the value that can be extracted from it. </rant>

The size of the market for DNA storage systems depends upon their cost. In 2018's DNA's Niche in the Storage Market I imagined myself as the marketing person for a DNA storage system and posed these challenges:

Engineers, your challenge is to increase the speed of synthesis by a factor of a quarter of a trillion, while reducing the cost by a factor of fifty trillion, in less than 10 years while spending no more than $24M/yr.

Finance team, your challenge is to persuade the company to spend $24M a year for the next 10 years for a product that can then earn about $216M a year for 10 years.

Carlson is realistic about the engineering challenges:

For a DNA drive to compete with today’s archival tape drives, it must be able to write about 2 gigabits per second, which at demonstrated DNA data storage densities is about 2 billion bases per second. To put that in context, I estimate that the total global market for synthetic DNA today is no more than about 10 terabases per year, which is the equivalent of about 300,000 bases per second over a year. The entire DNA synthesis industry would need to grow by approximately 4 orders of magnitude just to compete with a single tape drive.
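Carlson's arithmetic is easy to check. The sketch below uses only the two figures from the quote (10 terabases per year and 2 billion bases per second); the variable names and the 365-day year are assumptions.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600

market_bases_per_year = 10e12   # "about 10 terabases per year"
target_bases_per_sec = 2e9      # "about 2 billion bases per second"

# Average synthesis rate of the whole industry, spread over a year.
market_bases_per_sec = market_bases_per_year / SECONDS_PER_YEAR

# How much faster a single DNA drive would need to write.
gap = target_bases_per_sec / market_bases_per_sec
orders_of_magnitude = math.log10(gap)

print(f"industry average: {market_bases_per_sec:,.0f} bases/s")
print(f"gap to one tape drive: {gap:,.0f}x "
      f"({orders_of_magnitude:.1f} orders of magnitude)")
```

The industry average works out to a little over 300,000 bases per second and the gap to a little under 4 orders of magnitude, matching the quote.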

One of the speed-ups that is needed is chip-based massive parallelism:

One of our goals was to build a semiconductor chip to enable high-density, high-throughput DNA synthesis. That chip, which we completed in 2021, demonstrated that it is possible to digitally control electrochemical processes in millions of 650-nanometer-diameter wells.

It was faster, but its chemistry had problems:

The main problem is that it employs a volatile, corrosive, and toxic organic solvent (acetonitrile), which no engineer wants anywhere near the electronics of a working data center.

Moreover, based on a sustainability analysis of a theoretical DNA data center performed by my colleagues at Microsoft, I conclude that the volume of acetonitrile required for just one large data center, never mind many large data centers, would become logistically and economically prohibitive.

Although it is the industry standard, this isn't the only way to write DNA:

Fortunately, there is a different emerging technology for constructing DNA that does not require such solvents, but instead uses a benign salt solution. Companies like DNA Script and Molecular Assemblies are commercializing automated systems that use enzymes to synthesize DNA. These techniques are replacing traditional chemical DNA synthesis for some applications in the biotechnology industry. The current generation of systems use either simple plumbing or light to control synthesis reactions. But it’s difficult to envision how they can be scaled to achieve a high enough throughput to enable a DNA data-storage device operating at even a fraction of 2 gigabases per second.

The Microsoft/UW chip is an alternative way to control enzymatic synthesis:

The University of Washington and Microsoft team, collaborating with the enzymatic synthesis company Ansa Biotechnologies, recently took the first step toward this device. Using our high-density chip, we successfully demonstrated electrochemical control of single-base enzymatic additions.

The link is to Spatially Selective Electrochemical Cleavage of a Polymerase-Nucleotide Conjugate by Jake A. Smith et al:

Novel enzymatic methods are poised to become the dominant processes for de novo synthesis of DNA, promising functional, economic, and environmental advantages over the longstanding approach of phosphoramidite synthesis. Before this can occur, however, enzymatic synthesis methods must be parallelized to enable production of multiple DNA sequences simultaneously. As a means to this parallelization, we report a polymerase-nucleotide conjugate that is cleaved using electrochemical oxidation on a microelectrode array. The developed conjugate maintains polymerase activity toward surface-bound substrates with single-base control and detaches from the surface at mild oxidative voltages, leaving an extendable oligonucleotide behind. Our approach readies the way for enzymatic DNA synthesis on the scale necessary for DNA-intensive applications such as DNA data storage or gene synthesis.

This has, as the article points out, potential for dramatically reducing the current cost of writing DNA, but it is still many orders of magnitude away from being competitive with a tape drive. The good Dr. Pangloss can continue to enjoy the optimism for many more years.

Silica

The idea of writing data into fused silica with a femtosecond laser is at least a decade and a half old:

In 2009, Hitachi focused on fused silica known for its excellent heat and water resistance as a recording medium for long-term digital storage. After proposing the use of computed tomography to read data recorded with a femtosecond pulse laser, fused silica glass was confirmed as an effective storage medium. Applying this technology, it is possible to achieve multi-layer recording by changing the laser’s focal point to form microscopic regions (dots) with differing refractive indices. In 2012, a method was developed with Kyoto University to read the recorded dots in 4 layers using an optical microscope (recording density equivalent to a CD), and in 2013, 26 layer recording was achieved (recording density equivalent to a DVD). In order to increase recording density for practical applications, one means is to increase the number of recording layers. At the 100-layer level of recording density equivalent to a Blu-ray disc however issues arose in dot quality degradation and read errors resulting from crosstalk of data recorded in other layers.

But in the last few years Microsoft Research has taken this idea and run with it, as they report in a 68-author paper at SOSP entitled Project Silica: Towards Sustainable Cloud Archival Storage in Glass. It is a fascinating paper that should be read by anyone interested in archival storage.

Like me, the authors are skeptical of the near-term prospects for DNA storage:

Technologies like DNA storage offer the promise of an extremely dense media for long-term data storage. However, the high costs and low throughputs of oligonucleotide synthesis and sequencing continue to hamper the technology’s feasibility. The total amount of data demonstrably stored in DNA remains on the order of MBs, and building a functional storage system that can offer reasonable SLAs underpinned by DNA is a substantial challenge. Alternative DNA-based technologies like dNAM attempt to bypass costly sequencing and synthesis steps, sacrificing density down to densities comparable with magnetic tape.

Hence their focus on a medium with, in theory, a somewhat lower volumetric density. From their abstract:

This paper presents Silica: the first cloud storage system for archival data underpinned by quartz glass, an extremely resilient media that allows data to be left in situ indefinitely. The hardware and software of Silica have been co-designed and co-optimized from the media up to the service level with sustainability as a primary objective. The design follows a cloud-first, data-driven methodology underpinned by principles derived from analyzing the archival workload of a large public cloud service.

Their analysis of the workload of a tape-based cloud archival storage system in Section 2 shows that:

on average for every MB read there are 47 MBs written, and for every read operation there are 174 writes. We can see some variation across months, but writes always dominate by over an order of magnitude.

...

Small files dominate the workload, with 58.7% of the reads for files of 4 MiB or smaller. However, these reads only contribute 1.2% of the volume of data read. Files larger than 256 MiB comprise around 85% of bytes read but less than 2% of total read requests. Additionally, there is a long tail of request sizes: there is ∼ 10 orders of magnitude between the smallest and largest requested file sizes.

...

We observe a variability in the workload within data centers, with up to 7 orders of magnitude difference between the median and the tail, as well as large variability across different data centers.

...

At the granularity of a day, the peak daily [ingress] rate is ∼16x higher than the mean daily rate. As the aggregation time increases beyond 30 days, the peak over mean ratio decreases significantly down to only ∼2, indicating that the average write rate is similar across different 30-day windows.

...

To summarize, as expected for archival storage, the workload is heavily write-dominated. However, unexpectedly, the IO operations are dominated by small file accesses.
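The peak-over-mean figures quoted above depend on the aggregation window. The sketch below shows one way such a ratio could be computed from a daily ingress series. It is not the paper's method: the sliding-window definition, the function name and the synthetic data are all assumptions for illustration.

```python
def peak_over_mean(daily, window):
    """Ratio of the busiest `window`-day average to the overall daily mean."""
    if not 1 <= window <= len(daily):
        raise ValueError("window must be between 1 and len(daily)")
    overall_mean = sum(daily) / len(daily)
    window_means = [
        sum(daily[i:i + window]) / window
        for i in range(len(daily) - window + 1)
    ]
    return max(window_means) / overall_mean

# Synthetic series: 120 days at a base rate of 1 unit/day,
# with a 20-unit burst on every 30th day.
daily = [20.0 if day % 30 == 0 else 1.0 for day in range(120)]

for window in (1, 7, 30):
    ratio = peak_over_mean(daily, window)
    print(f"{window:2d}-day window: peak/mean = {ratio:.2f}")
```

On this synthetic series the ratio falls as the window grows, which is the qualitative behavior the paper reports: a system that can buffer writes for longer needs less peak write bandwidth.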

Subramanian Muralidhar and a team from Facebook, USC and Princeton had a paper at the 2014 OSDI that described Facebook's warm layer above the two cold storage layers and below Haystack, the hot storage layer. Section 3 of f4: Facebook's Warm BLOB Storage System provides workload data for this layer, which filters out most of the IOPS before they hit the archival layers. I explained in Facebook's Warm Storage that:

A BLOB is a Binary Large OBject. Each type of BLOB contains a single type of immutable binary content, such as photos, videos, documents, etc.

...

Figure 3 shows that the rate of I/O requests to BLOBs drops rapidly through time. The rates for different types of BLOB drop differently, but all 9 types have dropped by 2 orders of magnitude within 8 months, and all but 1 (profile photos) have dropped by an order of magnitude within the first week.

The vast majority of Facebook's BLOBs are warm, as shown in Figure 5 - notice the scale goes from 80-100%. Thus the vast majority of the BLOBs generate I/O rates at least 2 orders of magnitude less than recently generated BLOBs.

Note these important differences between Microsoft's and Facebook's storage hierarchies:

- Microsoft stores generic data and depends upon user action to migrate it down the hierarchy to the archive layer, whereas Facebook stores 9 specific types of application data which is migrated automatically based upon detailed knowledge of the workload for each of the types.

- Because Facebook can migrate data automatically, it can interpose a warm layer above the archive layer of the hierarchy, and because it has detailed knowledge about the behavior of each of the data types it can make good decisions about when to move each type down the hierarchy.

- Because the warm layer responds to the vast majority of the read requests and schedules the downward migrations, Facebook's archive layer's IOPS are a steady flow of large writes with very few reads, making efficient use of the hardware.

Contrast Facebook's scheduled ingest flow with the bursty ingest rate shown in Figure 2 of the Silica paper, which finds that:

At the granularity of a day, the peak daily rate is ∼16x higher than the mean daily rate.

Another interesting aspect of the Silica design is that the technologies, and thus the hardware, used for writing and reading are completely different. The authors point out the implications for the design:

As different technologies are used to read and write, after a platter is written it must be fully read using the same technology that will be used to read it subsequently. This happens before a platter is stored in the library and any staged write data is deleted.

This design has an interesting consequence: during the period when user data is being written into the library, the workload is going to become read-dominated. Every byte written must be read to be verified, in addition to the user reads. The read bandwidth has to be provisioned for peak user read rate, however the read workloads are very bursty, so read drive utilization is extremely low on average. Thus, the verification workload simply utilizes what would otherwise be idle read drives.

Using separate write and read drives has two advantages:

This allows independent scaling of read and write throughput. Additionally, this design allows us to create the first storage system that offers true air-gap-by-design: the robotics are unable to insert a glass platter into a write drive once the glass media has been written.

Since the majority of reads are for verification, the design needs to make specific provision for user reads:

To enable high drive efficiency, two platters can be mounted simultaneously in a read drive: one undergoing verification, and one servicing a customer read. Customer traffic is prioritized over verification, with the read drive switching away when a platter is mounted for a customer read. As soon as the customer platter stops being accessed, the read drive has the ability to quickly switch to the other platter and continue verification. This is similar to avoiding head-of-line blocking of mice flows by elephant flows in networked systems.
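The priority rule in the quote, customer reads pre-empt verification and verification resumes as soon as the drive is free, can be sketched as a tick-by-tick simulation of a single read drive. This is an illustration only, not Silica's scheduler: the function name, the unit-of-work model and the instantaneous switch between platters are all assumptions.

```python
def schedule_read_drive(customer_arrivals, verification_units, ticks):
    """Simulate one read drive for `ticks` time steps.

    customer_arrivals maps an arrival tick to units of customer read work.
    Returns a list with 'customer', 'verify' or 'idle' for each tick.
    """
    pending_customer = 0
    timeline = []
    for tick in range(ticks):
        pending_customer += customer_arrivals.get(tick, 0)
        if pending_customer > 0:        # customer traffic always wins
            pending_customer -= 1
            timeline.append("customer")
        elif verification_units > 0:    # otherwise continue verification
            verification_units -= 1
            timeline.append("verify")
        else:
            timeline.append("idle")
    return timeline

# Four units of verification, interrupted at tick 2 by a two-unit customer read.
timeline = schedule_read_drive({2: 2}, verification_units=4, ticks=8)
print(timeline)
```

Verification is delayed by the customer read but not lost, which is how the design can use otherwise idle read capacity without hurting customer latency.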

Like Facebook's, the prototype Silica systems are data-center size:

A Silica library is a sequence of contiguous write, read, and storage racks interconnected by a platter delivery system. Along all racks there are parallel horizontal rails that span the entire library. We refer to a side of the library (spanning all racks) as a panel. A set of free roaming robots called shuttles are used to move platters between locations.

...

A read rack contains multiple read drives. Each read drive is independent and has slots into which platters are inserted and removed. The number of shuttles active on a panel is limited to twice the number of read drives in the panel. The write drive is full-rack-sized and writes multiple platters concurrently.

Their performance evaluation focuses on the ability to respond to read requests within 15 hours. Their cost evaluation, like Facebook's, focuses on the savings from using warehouse-type space to house the equipment, although it isn't clear that they have actually done so. The rest of their cost evaluation is somewhat hand-wavy, as is natural for a system that isn't yet in production:

The Silica read drives use polarization microscopy, which is a commoditized technique widely used in many applications and is low-cost. Currently, system cost in Silica is dominated by the write drives, as they use femtosecond lasers which are currently expensive and used in niche applications. This highlights the importance of resource proportionality in the system, as write drive utilization needs to be maximized in order to minimize costs. As the Silica technology proliferates, it will drive up the demand for femtosecond lasers, commoditizing the technology.

I'm skeptical of this last point. Archival systems are a niche in the IT market, and one on which companies are loath to spend money. The only customers for systems like Silica are the large cloud providers, who will be reluctant to commit their archives to technology owned by a competitor. Unless a mass-market application for femtosecond lasers emerges, the scope for cost reduction is limited.

Conclusion

Six years ago I wrote:

time-scales in the storage industry are long. Disk is a 60-year-old technology, tape is at least 65 years old, CDs are 35 years old, flash is 30 years old and has yet to impact bulk data storage.

Six years on, flash has finally impacted the bulk storage market, but it isn't predicted to ship as many bits as hard disks for another four years, when it will be a 40-year-old technology. Actual demonstrations of DNA storage are only 12 years old, and similar demonstrations of silica media are 15 years old. History suggests it will be decades before these technologies impact the storage market.

3 comments:

"To put that in context, I estimate that the total global market for synthetic DNA today is no more than about 10 terabases per year, which is the equivalent of about 300,000 bases per second over a year."

As a person who works in synthetic biology, the cheaper you make it the more of it we'll buy.

There are other bottlenecks that would start hitting once you speed up synthesis (DNA assembly, sequencing, and the downstream assays that the DNA is used for). Though of course work is being put into this as well.

It would be possible to synthesize massive random, or stochastically controlled semi-random sequences of DNA in a single tube in far greater throughput, and then isolate these DNA molecules in arrays, and probably also identify their sequences at the same time. I wonder if something like this could be made useful for data storage.

"The developed conjugate maintains polymerase activity toward surface-bound substrates with single-base control and detaches from the surface at mild oxidative voltages, leaving an extendable oligonucleotide behind."

Enzymes are tiny, but nucleotides are even tinier. When I learn about enzymatic technologies they basically all throw away the enzyme to attach a single nucleotide. It's on the order of throwing away a range after cooking a single meal. But then PCR allows us to amplify that single DNA molecule millions and billions of times.

The cost of enzymes is small enough that even throwing them away is pretty cheap (probably less than the cost of the nuclease-free water used to wash them away). Still I do know that there are some enzymes covalently bound to magnetic beads for ease of removal. Such technologies may some day facilitate synthetic enzyme reuse.

When you say "10,000 100TB Blu-ray disks", do you mean 100 GB disks?

Good catch, Yarrow. Thanks!
