
I'm David Rosenthal, and this is a place to discuss the work I'm doing in Digital Preservation.

Wednesday, December 27, 2023

Make Up Your Mind

Tuesday, December 26, 2023

There Is No Planet B: Part 2


- Kelley and Zach Weinersmith's A City on Mars.

- Towards Sustainable Horizons: A Comprehensive Blueprint for Mars Colonization by Florian Neukart.

Tuesday, December 12, 2023

Why Worry About Resources?

The attitude of the crypto-bros and tech more generally is that they are going to make so much money that paying for whatever resources they need to make it will be a drop in the ocean, and that externalities such as carbon emissions are someone else's problem.

I discussed Proof-of-Work's scandalous waste of energy in my EE380 talk, Can We Mitigate Cryptocurrencies' Externalities?, and elsewhere since 2017, often citing the work of Alex de Vries. Two years ago de Vries and Christian Stoll's Bitcoin's growing e-waste problem pointed out that, in addition to mining rigs' direct waste of power, their short economic life drove a massive e-waste problem, adding the embedded energy of the hardware to the total.

Now, de Vries' Bitcoin’s growing water footprint reveals that supporting gambling, money laundering and crime causes yet another massive waste of resources.

But that's not all. de Vries has joined a growing chorus of researchers showing that the VCs' pivot to AI wastes similarly massive amounts of power. Can analysis of AI's e-waste and water consumption be far behind?

Below the fold I discuss papers by de Vries and others on this issue.


[Figure: de Vries Fig. 1]


Thursday, November 30, 2023

There Is No Planet B: Part 1

[Image: Source CC-BY-SA-4.0]

Below the fold in part 1 of this two-part post, I apply some arithmetic just to the logistics of Musk's plans for Mars. Part 2 isn't specific to Musk's plans; I discuss two attempts to list the set of "knowns" about Mars exploration, for which the science is fairly clear but the engineering and the economics don't exist, and the much larger set of "known unknowns", critical aspects requiring robust solutions for which the science, let alone the engineering, doesn't exist:

- Kelley and Zach Weinersmith's A City on Mars.

- Maciej Cegłowski's Why Not Mars?.

Tuesday, November 28, 2023

Decentralized Finance Isn't

A major theme of this blog since 2014's Economies of Scale in Peer-to-Peer Networks has been that decentralized systems aren't, because economic forces overwhelm the technologies of decentralization. Last year I noted that this rule applied to Decentralized Finance (DeFi) in Shadow Banking 2.0 based on Prof. Hilary Allen's DeFi: Shadow Banking 2.0? which she summarizes thus:

TL;DR: DeFi is neither decentralized, nor very good finance, so regulators should have no qualms about clamping down on it to protect the stability of our financial system and broader economy.

And also DeFi risks and the decentralisation illusion by Sirio Aramonte, Wenqian Huang and Andreas Schrimpf of the Bank for International Settlements who write:

While the main vision of DeFi’s proponents is intermediation without centralised entities, we argue that some form of centralisation is inevitable. As such, there is a “decentralisation illusion”. First and foremost, centralised governance is needed to take strategic and operational decisions. In addition, some features in DeFi, notably the consensus mechanism, favour a concentration of power.

Below the fold I look at new evidence that the process of centralizing DeFi is essentially complete.

Tuesday, November 21, 2023

Desperately Seeking Retail


abnormal first-significant-digit distributions, size rounding, and transaction tail distributions on unregulated exchanges reveal rampant manipulations unlikely driven by strategy or exchange heterogeneity. We quantify the wash trading on each unregulated exchange, which averaged over 70% of the reported volume.

Unfortunately, despite knowing about the manipulation, the SEC approved Bitcoin futures ETFs. Among the requests that the SEC refused was one from Grayscale Bitcoin Trust. Grayscale sued the SEC and, back in August, a panel of judges ruled in their favor:

The court's panel of judges said Grayscale showed that its proposed bitcoin ETF is "materially similar" to the approved bitcoin futures ETFs. That's because the underlying assets— bitcoin and bitcoin futures - are "closely correlated," and because the surveillance sharing agreements with the CME are "identical and should have the same likelihood of detecting fraudulent or manipulative conduct in the market for bitcoin."

With that in mind, the court ruled that the SEC was "arbitrary and capricious" to reject the filing because it "never explained why Grayscale owning bitcoins rather than bitcoin futures affects the CME’s ability to detect fraud."

The prospect of the SEC approving this ETF and others from, for example, BlackRock and Fidelity led to something of a buying frenzy in Bitcoin, as shown in the "price" chart and in headlines such as Bitcoin ETF Exuberance Drives Four-Week ‘Nothing for Sale’ Rally:

Bitcoin is climbing for a fourth consecutive week, with the digital token’s price lingering just below an 18-month high of $38,000, as more investors bet that US exchange-traded funds that hold the largest cryptocurrency are on the verge of winning regulatory approval.

Below the fold I look into where this euphoria came from, and why it might be misplaced.
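The wash-trading study quoted above flags "abnormal first-significant-digit distributions". Such tests usually compare the observed leading digits with Benford's law, under which digit d leads with probability log10(1 + 1/d). The following is a minimal sketch assuming that comparison and a plain chi-squared statistic; it is not the study's own code, and the "trade sizes" are hypothetical stand-ins: powers of two (which roughly follow Benford's law) versus a pile of identically sized trades.

```python
import math
from collections import Counter
from typing import Iterable

# Benford's law: expected share of values whose leading digit is d.
BENFORD = {d: math.log10(1 + 1 / d) for d in range(1, 10)}

# Chi-squared critical value for 8 degrees of freedom at the 5% level.
CHI2_CRITICAL_8DF_5PCT = 15.507


def first_digit(x: float) -> int:
    """Return the first significant (leading nonzero) digit of a positive number."""
    if x <= 0:
        raise ValueError("only positive values have a leading digit here")
    # Scientific notation puts the leading digit first, e.g. 0.00372 -> '3.72...e-03'.
    return int(f"{x:.14e}"[0])


def benford_chi_square(values: Iterable[float]) -> float:
    """Chi-squared distance between the observed leading digits and Benford's law."""
    digits = [first_digit(v) for v in values]
    n = len(digits)
    if n == 0:
        raise ValueError("need at least one value")
    counts = Counter(digits)
    return sum(
        (counts.get(d, 0) - n * p) ** 2 / (n * p) for d, p in BENFORD.items()
    )


# Hypothetical data: powers of two roughly follow Benford's law...
natural = [2**k for k in range(1, 1001)]
# ...while a pile of identically sized "trades" obviously does not.
round_lots = [100] * 1000

print(benford_chi_square(natural) < CHI2_CRITICAL_8DF_5PCT)     # True
print(benford_chi_square(round_lots) > CHI2_CRITICAL_8DF_5PCT)  # True
```

Benford's law only holds for data spanning several orders of magnitude, so a high statistic is a red flag worth investigating rather than proof of wash trading on its own.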

Thursday, November 16, 2023

NDSA Sustainability Excellence Award

Yesterday, at the DigiPres conference, Vicky Reich and I were awarded a "Sustainability Excellence Award" by the National Digital Stewardship Alliance. This is a tribute to the sustained hard work of the entire LOCKSS team over more than a quarter-century.

Below the fold are the citation and our response.

Tuesday, November 14, 2023

Alameda's On-Ramp

Tether has been one of the major mysteries of the cryptosphere for a long time. It has never been audited, and has been described as being "practically quilted out of red flags". Matt Levine says "I feel like eventually Tether is going to be an incredibly interesting story, but I still don’t know what it is." He was commenting on Emily Nicolle's Bankman-Fried Trial Renews Conjecture About Alameda’s $40 Billion Tether Stablecoin Pile. It includes a lot of interesting information, starting with this:

Alameda was Tether’s largest non-exchange customer between 2020 and 2022, with blockchain data showing it received almost $40 billion in transfers of its stablecoin USDT directly from the company — equal to roughly 20% of all USDT tokens ever issued.

Below the fold, I discuss the questions Nicolle raises, and go on to ask one she doesn't.

Thursday, November 9, 2023

Robotaxi Economics


Tuesday, November 7, 2023

My Old Car

This post celebrates my weird old car's 30th birthday. It is a Mazda RX-7 dated November 1993, carrying the California license RX7 DSHR. I bought it new and in the almost 30 years since have driven it for nearly 140K miles. Unusually for an RX-7 this old, it is almost completely stock and has never been on a track.

Below the fold, I recount an RX-7 saga spanning thirty-eight years and well over a quarter-million miles.

Thursday, November 2, 2023

Limited Liability

Regulation works by assigning liability for actions to specific actors. The whole idea of decentralization is that by diffusing responsibility among a large number of participants the system could evade regulation. Each participant would bear such a small part of the responsibility for the system's actions that enforcing liability against each of them would be infeasible. The problem with this is that, for economic reasons, the Gini coefficients of cryptocurrencies are extremely large. It is true that most of the participants have only a small part of the responsibility, but there are always some who have a large share and are thus worth enforcing liability against.
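The Gini coefficient measures how unequally something is distributed, from 0 (everyone holds the same amount) toward 1 (one holder has everything). A minimal Python sketch using the standard rank-based formula; the holdings are made-up numbers for illustration, not data about any real cryptocurrency.

```python
from typing import Sequence


def gini(holdings: Sequence[float]) -> float:
    """Gini coefficient of non-negative holdings.

    0 means everyone holds the same amount; with n holders the maximum,
    (n - 1) / n, means a single holder owns everything.
    """
    if not holdings:
        raise ValueError("need at least one holding")
    if any(h < 0 for h in holdings):
        raise ValueError("holdings must be non-negative")
    total = sum(holdings)
    if total == 0:
        return 0.0
    ordered = sorted(holdings)
    n = len(ordered)
    # Rank-weighted sum over the ascending sort (ranks 1..n).
    weighted = sum(rank * x for rank, x in enumerate(ordered, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Hypothetical holdings, not data about any real coin.
print(gini([5, 5, 5, 5]))    # 0.0   (perfect equality)
print(gini([1, 2, 3, 4]))    # 0.25
print(gini([0, 0, 0, 100]))  # 0.75  (one holder has everything)
```

The more the holdings concentrate in a few wallets, the closer the value gets to its maximum, and those few large holders are the ones worth pursuing.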

In Piercing The Veil I discussed a Commodity Futures Trading Commission enforcement action in which the bZeroX exchange converted itself into a DAO:


By transferring control to a DAO, bZeroX’s founders touted to bZeroX community members the operations would be enforcement-proof—allowing the Ooki DAO to violate the CEA and CFTC regulations with impunity, as alleged in the federal court action. The order finds the DAO was an unincorporated association of which Bean and Kistner were actively participating members and liable for the Ooki DAO’s violations of the CEA and CFTC regulations.

Below the fold I discuss a post in which Matt Levine reports on a more recent lawsuit making the same argument.

Tuesday, October 31, 2023

Shitcoins


Trading volume on most exchanges has plummeted in the past year, even as the number of coins has continued to multiply, with more than 1.8 million tokens listed on centralized and decentralized exchanges.

Kharif reported on the collapse of trading volume earlier in Coinbase’s Quarterly Crypto Trading Volume Likely Lowest Since Before Public Debut:

The largest US digital-asset platform registered about $76 billion in spot trading volume, a drop of 52% from the year-ago period, according to data compiled by researcher CCData. The tally is also likely the least since before the company’s much ballyhooed direct listing on the Nasdaq Stock Market in April 2021, or just months before prices of cryptocurrencies peaked.

Thus what caught my eye wasn't the drop in trading volume, it was "more than 1.8 million tokens"! Below the fold I ask how this could possibly make sense.

Tuesday, October 24, 2023

Elon Musk: Threat or Menace Part 3


The reason for yet another post in the series is that Trisha Thadani, Rachel Lerman, Imogen Piper, Faiz Siddiqui and Irfan Uraizee of the Washington Post have published an extraordinarily detailed forensic analysis of the first widely publicized fatal Autopilot crash in The final 11 seconds of a fatal Tesla Autopilot crash. This was the crash in which Autopilot failed to see a semi-trailer stopped across its path and decapitated the driver.

Below the fold I comment on the details their analysis reveals.

Thursday, October 19, 2023

The Invisible Hand Of The Market

In Not "Sufficiently Decentralized" I explained how the SEC's William Hinman kneecapped his agency's ability to regulate Bitcoin and Ethereum, handing the baton to the CFTC. Matt Levine explains the result:

The regulatory situation in the US is that there are exchange-traded funds that allow people to speculate on Bitcoin (and Ether), but those funds hold Bitcoin (or Ether) futures, not actual Bitcoins. The US Securities and Exchange Commission has, so far, declined to approve spot Bitcoin ETFs (funds that just hold Bitcoins). It has said that this is because the spot Bitcoin market is largely unregulated and so there is a risk of manipulation, whereas Bitcoin futures trade on regulated US exchanges and so are safer. This has always struck me as incoherent (manipulating the spot market also manipulates the futures), and in August a federal appeals court ruled that it was “arbitrary and capricious,” which probably means that the SEC will have to approve spot Bitcoin ETFs pretty soon.

Below the fold I discuss an $80M demonstration of the "risk of manipulation".

Tuesday, October 10, 2023

Not "Sufficiently Decentralized"

[Figure: Mining power 25 June 2018]

when I look at Bitcoin today, I do not see a central third party whose efforts are a key determining factor in the enterprise. The network on which Bitcoin functions is operational and appears to have been decentralized for some time, perhaps from inception. Applying the disclosure regime of the federal securities laws to the offer and resale of Bitcoin would seem to add little value.[9] And putting aside the fundraising that accompanied the creation of Ether, based on my understanding of the present state of Ether, the Ethereum network and its decentralized structure, current offers and sales of Ether are not securities transactions. And, as with Bitcoin, applying the disclosure regime of the federal securities laws to current transactions in Ether would seem to add little value. Over time, there may be other sufficiently decentralized networks and systems where regulating the tokens or coins that function on them as securities may not be required.

Follow me below the fold for both the evidence that Hinman was talking through his hat, and also yet another update on the theme of my Brief Remarks to IOSCO DeFi WG, that successful systems claiming to be decentralized, like Bitcoin and Ethereum, aren't.

Thursday, October 5, 2023

The Oracle Problem


Overall, we estimate that in 2022, DeFi protocols lost $403.2 million in 41 separate oracle manipulation attacks.

What are oracles and how can manipulating them earn an average of $1.1M/day? Below the fold I attempt an explanation.
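The $1.1M/day figure is the 2022 total spread over the year; the same numbers also give the average take per attack (this derived arithmetic is mine, not a figure from the quoted source):

```latex
\frac{\$403.2\,\text{M}}{365\ \text{days}} \approx \$1.10\,\text{M per day},
\qquad
\frac{\$403.2\,\text{M}}{41\ \text{attacks}} \approx \$9.8\,\text{M per attack}
```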

Wednesday, October 4, 2023

LOCKSS Program Turns 25

Happy 25th Birthday LOCKSS! The fifteen-year retrospective is here, and the twenty-year one is here, in which I wrote:

Thanks again to the NSF, Sun Microsystems, and the Andrew W. Mellon Foundation for the funding that allowed us to develop the system. Many thanks to the steadfast support of the libraries of the LOCKSS Alliance, and the libraries and publishers of the CLOCKSS Archive, that has sustained it in production. Special thanks to Don Waters for facilitating the program's evolution off grant funding, and to Margaret Kim for the original tortoise logo.

Now for some more gratuitous self-promotion. This means:

- I started work on decentralized, permissionless peer-to-peer systems a quarter of a century ago.

- It is two decades since we were awarded an SOSP "Best Paper" for Preserving peer replicas by rate-limited sampled voting.

- Next month it will be a decade since my first post on cryptocurrencies, The Bitcoin vulnerability.

- It is five years since I posted Bitcoin's Academic Pedigree, based on the paper by Arvind Narayanan and Jeremy Clark, showing that all the techniques Satoshi Nakamoto assembled to create Bitcoin were published between 43 and 31 years ago now.

Tuesday, September 26, 2023

The Bitcoin "Lab Leak" Theory

In the late '80s and early '90s electronic cash was a hot topic among cryptographers. People such as David Chaum published extensively in journals such as Springer's Advances in Cryptology. Staff at the US National Security Agency (NSA) were naturally interested in developments in cryptography, so on 18th June 1996 the NSA's Laurie Law, Susan Sabett and Jerry Solinas reviewed the academic literature on electronic cash and published HOW TO MAKE A MINT: THE CRYPTOGRAPHY OF ANONYMOUS ELECTRONIC CASH:

This report has surveyed the academic literature for cryptographic techniques for implementing secure electronic cash systems. Several innovative payment schemes providing user anonymity and payment untraceability have been found. Although no particular payment system has been thoroughly analyzed, the cryptography itself appears to be sound and to deliver the promised anonymity.

These schemes are far less satisfactory, however, from a law enforcement point of view. In particular, the dangers of money laundering and counterfeiting are potentially far more serious than with paper cash. These problems exist in any electronic payment system, but they are made much worse by the presence of anonymity.

Alas, this understandable effort by NSA staff has become the keystone in a bizarre theory that Satoshi Nakamoto is an alias for the NSA, which developed Bitcoin in secrecy as a "monetary bioweapon" a decade before it somehow leaked and infected the world.

I must apologize that, below the fold, I devote an entire post to this conspiracy theory.

Tuesday, September 19, 2023

IOSCO DeFi WG Report

Below the fold is the discussion of Policy Recommendations for Decentralized Finance (DeFi): Consultation Report that I promised in Brief Remarks to IOSCO DeFi WG.

Tuesday, September 12, 2023

Brief Remarks to IOSCO DeFi WG

Earlier this year I was invited to take part in a meeting of the DeFi Working Group of the International Organization of Securities Commissions' Fintech Task Force. IOSCO is the organization that links securities regulators worldwide. The goal of the meeting was to provide input for a follow-up to IOSCO's Decentralized Finance Report from March 2022. I was asked to keep this confidential until the report was published, which has now happened; Policy Recommendations for Decentralized Finance (DeFi): Consultation Report.

Below the fold is the text of my brief introductory remarks with links to the sources. I will discuss the report in a subsequent post once I have studied it.


Tuesday, September 5, 2023

Microsoft Keys

Back in 2021 I gave a Talk At Berkeley's Information Access Seminar that summarized two long posts from two years before that:

On Friday 25th Dan Goodin had two posts documenting that even the biggest software companies haven't fixed the problems I was talking about:


- Renegade certificate removed from Windows. Then it returns. Microsoft stays silent.

- Microsoft signing keys keep getting hijacked, to the delight of Chinese threat actors

Tuesday, August 29, 2023

Prof. Hilary Allen On Venture Capital

After writing Predatory Pricing, via David Gerard, I found Prof. Hilary Allen's Interest Rates, Venture Capital, and Financial Stability. In the abstract she writes:

This Article illuminates one path through which the prolonged period of low interest rates from 2009-2021 has impacted financial stability: it traces how yield-seeking behavior in the wake of the Global Financial Crisis and Covid pandemic led to a bubble in the venture capital industry, which in turn spawned a crypto bubble as well as a run on the VC-favored Silicon Valley Bank.

...

It argues for increased monitoring of the venture capital industry by financial stability regulators, given that venture capital is well-positioned to generate asset bubbles now and in the future. More specifically, it argues for more aggressive enforcement of the securities laws to tamp down on the present crypto bubble, as well as for structural separation between crypto and the traditional financial system.

Prof. Allen describes yet another of the many ways the current venture capital industry is malfunctioning, and calls for increased oversight of these risks to financial stability. Below the fold I discuss this and one of the papers it cites.

Thursday, August 24, 2023

Techno-feudalism


Capitalism is the name of a power relationship of the few over the many. It is a hierarchy. It is feudalism liquidified, where money has replaced land as the source of power, but there's still the powerful and there's still the weak, and there's still incredible inequality in the world system.

Second, in Autoenshittification Cory Doctorow writes:

In his forthcoming book, Techno Feudalism: What Killed Capitalism, Yanis Varoufakis proposes that capitalism has died – but it wasn't replaced by socialism. Rather, capitalism has given way to feudalism:

https://www.penguin.co.uk/books/451795/technofeudalism-by-varoufakis-yanis/9781847927279

Under capitalism, capital is the prime mover. The people who own and mobilize capital – the capitalists – organize the economy and take the lion's share of its returns. But it wasn't always this way: for hundreds of years, European civilization was dominated by rents, not markets.

Below the fold some discussion of this idea.

Thursday, August 17, 2023

Optical Media Durability Update

Five years ago I posted Optical Media Durability and discovered:


Surprisingly, I'm getting good data from CD-Rs more than 14 years old, and from DVD-Rs nearly 12 years old. Your mileage may vary.

Four years ago I repeated the mind-numbing process of feeding 45 disks through the reader and verifying their checksums. Three years ago I did it again, and then again two years ago, and then again a year ago.

It is time again for this annual chore, and yet again this year every single MD5 was successfully verified. Below the fold, the details.
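The annual chore amounts to recomputing each file's MD5 and comparing it with the checksum recorded when the disc was burned. A minimal Python sketch, assuming each disc carries an md5sum-style manifest (one "digest  filename" line per file); the post doesn't say how the checksums are stored, so the manifest layout and the function names here are hypothetical.

```python
import hashlib
from pathlib import Path


def md5_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex MD5 of a file, read in chunks so large disc images need little memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest: Path) -> dict[str, bool]:
    """Check every '<md5>  <name>' line of an md5sum-style manifest.

    Names are resolved relative to the manifest's directory.
    """
    results: dict[str, bool] = {}
    for line in manifest.read_text().splitlines():
        if not line.strip():
            continue  # skip blank lines
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # md5sum prefixes binary-mode names with '*'
        try:
            ok = md5_of_file(manifest.parent / name) == expected.lower()
        except OSError:
            ok = False  # missing files and read errors both count as failures
        results[name] = ok
    return results


# Hypothetical usage for one disc mounted at /media/cdrom:
# bad = [n for n, ok in verify_manifest(Path("/media/cdrom/MD5SUMS")).items() if not ok]
```

MD5 is adequate for detecting media decay like this, though not for detecting deliberate tampering.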

Tuesday, August 8, 2023

Predatory Pricing

Adam Rogers' The dirty little secret that could bring down Big Tech is based on work by Matt Wansley and Sam Weinstein of the Cardozo School of Law, who questioned why the investors in companies such as Uber, Lyft, or WeWork would sink:

billions of dollars of capital into a money-losing business where the path to profitability wasn't clear?

The answer is the remarkable effectiveness of predatory pricing at making money for VCs and founders. Rogers writes:

Wansley and Weinstein — who, not coincidentally, used to work in antitrust enforcement at the Justice Department — set out to change that. In a new paper titled "Venture Predation," the two lawyers make a compelling case that the classic model of venture capital — disrupt incumbents, build a scalable platform, move fast, break things — isn't the peak of modern capitalism that Silicon Valley says it is. According to this new thinking, it's anticapitalist. It's illegal. And it should be aggressively prosecuted, to promote free and fair competition in the marketplace.

Below the fold I discuss Wansley and Weinstein's paper and relate it to events in the cryptosphere.

Thursday, August 3, 2023

Video Game History

Arguably, video games have become the most important entertainment medium. 2022 US video game revenue topped $85B, compared with global movie industry revenue of $76.7B. Game history is thus an essential resource for scholars of culture, but the industry's copyright paranoia means they have little access to it.

In 87% Missing: the Disappearance of Classic Video Games Kelsey Lewin of the Video Game History Foundation describes their recent study in cooperation with the Software Preservation Network, published by Phil Salvador as Survey of the Video Game Reissue Market in the United States. The report's abstract doesn't mince words:

[Table: Salvador Table 1]

Only 13 percent of classic video games published in the United States are currently in release (n = 1500, ±2.5%, 95% CI). These low numbers are consistent across platform ecosystems and time periods. Troublingly, the reissue rate drops below 3 percent for games released prior to 1985—the foundational era of video games—indicating that the interests of the marketplace may not align with the needs of video game researchers. Our experiences gathering data for this study suggest that these problems will intensify over time due to a low diversity of reissue sources and the long-term volatility of digital game storefronts.

Below the fold I discuss some of the details.
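The "±2.5%, 95% CI" is consistent with the usual worst-case margin of error for a proportion estimated from a sample of 1,500, which plugs in p = 0.5; at the observed 13 percent the margin is narrower. A sketch of that arithmetic, assuming a simple random sample and the normal approximation with no finite-population correction; the report's exact method is not stated here.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    z = 1.96 corresponds to 95% confidence. Assumes a simple random sample
    and ignores any finite-population correction.
    """
    if not 0.0 <= p <= 1.0 or n <= 0:
        raise ValueError("need 0 <= p <= 1 and n > 0")
    return z * math.sqrt(p * (1.0 - p) / n)


n = 1500
worst_case = margin_of_error(0.5, n)    # p = 0.5 maximizes p * (1 - p)
at_estimate = margin_of_error(0.13, n)  # at the reported 13 percent
print(f"±{worst_case:.1%} worst case, ±{at_estimate:.1%} at p = 0.13")
# prints: ±2.5% worst case, ±1.7% at p = 0.13
```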

Tuesday, August 1, 2023

This Could Be An Issue

Allyson Versprille's Wall Street Banks Side With Nemesis Elizabeth Warren on Crypto Crackdown describes this improbable occurrence thus:


The Bank Policy Institute, a trade group for lenders that Warren often blasts, threw its weight behind bipartisan legislation that the Massachusetts Democrat and three of her Senate colleagues reintroduced this week. The bill aims to force the crypto industry to comply with tougher rules for combating money laundering and terrorism financing.

“The existing anti-money laundering and Bank Secrecy Act framework must account for digital assets, and we look forward to engaging in this process to defend our nation’s financial system against illicit finance in all its forms,” BPI said in a statement.

The three colleagues are Joe Manchin (D-WV), Roger Marshall (R-KS) and Lindsey Graham (R-SC). It is worth noting that Senators Warren and Graham also have a bill to tighten regulation of big tech, described in their "Guest Essay" for the New York Times entitled When It Comes to Big Tech, Enough Is Enough.

Follow me below the fold for the sting in this bill's tail.

Tuesday, July 25, 2023

Anti-trust

Technology markets, and others that share their strong economies of scale, naturally tend to evolve into oligopolies, if not outright monopolies. Brian Arthur described the mechanism in 1994's Increasing Returns and Path Dependence in the Economy. This tendency has been supercharged ever since Richard Posner and George Stigler of the Chicago School got the Reagan administration to hamstring anti-trust enforcement. We see the result in technology and many other markets, dominated by a few giant companies devoted, in Cory Doctorow's memorable coinage, to enshittification:

Here is how platforms die: first, they are good to their users; then they abuse their users to make things better for their business customers; finally, they abuse those business customers to claw back all the value for themselves. Then, they die.

This process applies more broadly than just to platforms, except the part about dying. Below the fold I discuss a recent development that provides an opportunity for you to take action to push back against it.

Tuesday, July 18, 2023

NY Fed: Metastablecoins

[Figure: Stablecoin circulation]

We think that willingness to absorb losses, even though USDT is fully collateralized and has an overnight liquidity buffer that exceeds most prime funds, suggests the token might be prone pre-emptive runs. Holders with immediate liquidity demands have an incentive (or first-mover advantage) to rush to sell in the secondary market before the supply of tokens from other liquidity-seekers picks up. The fear that USDT might not be able to maintain the peg may drive runs regardless of its actual capacity to support redemptions based on the liquidity of its collateral.

Now Kenechukwu Anadu et al. of the NY Federal Reserve have a deep dive into the events of May 2022 entitled Runs on Stablecoins. Below the fold I have some comments.

Tuesday, July 4, 2023

Blog on vacation

I have a stack of books that I set aside for summer reading, so this blog is on vacation for two weeks so I can at least reduce the height of the stack.

Tuesday, June 27, 2023

The Philosopher of Palo Alto

I just finished reading John Tinnell's The Philosopher of Palo Alto. Based on Stanford Library's extensive archive of Mark Weiser's papers, and interviews with many participants, it is an impressively detailed and, as far as I can tell, accurate account of the "ubiquitous computing" work he drove at Xerox PARC. I strongly recommend reading it. Tinnell covers Weiser's life story to his death at age 46 in 1999, the contrast between his work and that at Nick Negroponte's MIT Media Lab, and the ultimate failure of his vision.

Tinnell quotes Lucy Suchman's critique of Weiser's approach to innovation:

Under this approach, Suchman claimed, a lab "[provided] distance from practicalities that must eventually be faced" — but facing up to those practicalities was left up to staff in some other department.

To be fair, I would say the same criticism applied to much of the Media Lab's work too.

As I was at the time a member of "staff in some other department" at Sun Microsystems and then Nvidia, below the fold I discuss some of the "practicalities" that should have been faced earlier rather than later or not at all.

Tuesday, June 20, 2023

2023 Storage Roundup

It is time for another roundup of news from the storage industry, so follow me below the fold.

Thursday, June 15, 2023

Code Isn't Law: An Analogy


Prosecutors claim Eisenberg manipulated Mango Markets futures contracts on Oct. 11, when he drove up the price of swaps by 1,300%. He used them to borrow about $110 million of cryptocurrency from other Mango depositors, the US alleges.

Four days later he posted on Twitter: “I was involved with a team that operated a highly profitable trading strategy last week.” He also said he believed “all of our actions were legal open market actions, using the protocol as designed.”

Below the fold, my analogy.

Tuesday, June 13, 2023

A Local Large Language Model

I wanted to see what all the hype around Large Language Models (LLMs) amounted to, but the privacy terms in the EULA for Bard were completely unacceptable. So when I read Dylan Patel and Afzal Ahmad's We Have No Moat: And neither does OpenAI:

Open-source models are faster, more customizable, more private, and pound-for-pound more capable. They are doing things with $100 and 13B params that we struggle with at $10M and 540B. And they are doing so in weeks, not months.

I decided to try open source LLMs for myself, since as they run locally the privacy risk is mitigated. Below the fold I tell the story so far; I will update it as I make progress.

Tuesday, June 6, 2023

Flash Loans

I have been generally skeptical of claims that blockchain technology and cryptocurrencies are major innovations. Back in 2017 Arvind Narayanan and Jeremy Clark published Bitcoin's Academic Pedigree, showing that Satoshi Nakamoto assembled a set of previously published components in a novel way to create Bitcoin. Essentially the only innovation among the components was the Longest Chain Rule.
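In Bitcoin "longest" means the chain carrying the most cumulative proof-of-work, not the one with the most blocks. A toy fork-choice sketch in Python; the Block type and its integer work field are hypothetical simplifications, and real nodes also validate every block and apply their own tie-breaking rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Block:
    block_id: str
    parent: Optional[str]  # None marks the genesis block
    work: int              # hypothetical stand-in for the block's proof-of-work


def chain_work(blocks: dict[str, Block], tip: str) -> int:
    """Total work from `tip` back to genesis, following parent links."""
    total, current = 0, tip
    while current is not None:
        block = blocks[current]
        total += block.work
        current = block.parent
    return total


def best_tip(blocks: dict[str, Block]) -> str:
    """Fork choice: the tip whose chain carries the most cumulative work.

    Ties go to whichever tip appears first in `blocks`.
    """
    parents = {b.parent for b in blocks.values() if b.parent is not None}
    tips = [block_id for block_id in blocks if block_id not in parents]
    return max(tips, key=lambda tip: chain_work(blocks, tip))


# A fork: g -> a -> b (three blocks, work 3) versus g -> c (two blocks, work 6).
blocks = {
    "g": Block("g", None, 1),
    "a": Block("a", "g", 1),
    "b": Block("b", "a", 1),
    "c": Block("c", "g", 5),
}
print(best_tip(blocks))  # c: fewer blocks, but more work
```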

But, for good or ill, there is at least one genuinely innovative feature of the cryptocurrency ecosystem and in Flash loans, flash attacks, and the future of DeFi Aidan Saggers, Lukas Alemu and Irina Mnohoghitnei of the Bank of England provide an excellent overview of it. They:

analysed the Ethereum blockchain (using Alchemy’s archive node) and gathered every transaction which has utilised the ‘FlashLoan’ smart contract provided by DeFi protocol Aave V1 and V2. The Aave protocol, one of the largest DeFi liquidity providers, popularised flash loans and is often credited with their design. Using this data we were able to gather 60,000 unique transactions from Aave’s flash loan inception through to 2023.

Below the fold I discuss their overview and some of the many innovative ways in which flash loans have been used.

Tuesday, May 30, 2023

Be Careful What You Vote For

One big idea in cryptocurrencies is attempting to achieve decentralization through "governance tokens" whose HODLers can control a Decentralized Autonomous Organization (DAO) by voting upon proposed actions. Of course, this makes it blindingly obvious that the "governance tokens" are securities and thus regulated by the SEC. But even apart from that problem, recent events, culminating in "little local difficulties" for Tornado Cash, demonstrate that there are several others.

Below the fold I look at these problems.
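Token-weighted voting of the kind described above can be sketched in a few lines of Python. This is a hypothetical illustration, not any real DAO's contract: the `Proposal` class, the `cast_vote` and `passes` functions, the quorum rule and the balances are all assumptions. It shows that the outcome depends on how many tokens each voter holds, not on how many voters there are.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """A hypothetical DAO proposal tallied by token weight (one token, one vote)."""
    description: str
    votes_for: int = 0
    votes_against: int = 0
    voters: set[str] = field(default_factory=set)


def cast_vote(p: Proposal, balances: dict[str, int], voter: str, support: bool) -> None:
    """Add the voter's whole token balance to one side of the tally."""
    if voter in p.voters:
        raise ValueError(f"{voter} has already voted")
    weight = balances.get(voter, 0)
    if weight == 0:
        raise ValueError(f"{voter} holds no governance tokens")
    p.voters.add(voter)
    if support:
        p.votes_for += weight
    else:
        p.votes_against += weight


def passes(p: Proposal, total_supply: int, quorum: float) -> bool:
    """Assumed rule: turnout must reach `quorum` of supply and "for" must beat "against"."""
    turnout = p.votes_for + p.votes_against
    return turnout >= quorum * total_supply and p.votes_for > p.votes_against


# Hypothetical distribution: one whale holds 600 of 1,000 tokens,
# 100 small holders hold 4 tokens each.
balances = {"whale": 600, **{f"holder{i}": 4 for i in range(100)}}
total_supply = sum(balances.values())

proposal = Proposal("Transfer the treasury to the whale")
cast_vote(proposal, balances, "whale", True)
for i in range(100):
    cast_vote(proposal, balances, f"holder{i}", False)

print(passes(proposal, total_supply, quorum=0.1))  # True: 600 tokens outvote 100 holders
```

Under this rule, whoever controls a majority of the tokens that vote controls the DAO, however many other HODLers there are.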

Tuesday, May 23, 2023

Fractional Reserve Crypto-Banking


Nakamoto's goal for Bitcoin was:

What is needed is an electronic payment system based on cryptographic proof instead of trust, allowing any two willing parties to transact directly with each other without the need for a trusted third party.

Below the fold I examine how this earliest cryptocurrency story changed.

Thursday, May 18, 2023

Lies, Damned Lies, & A16Z's Statistics

This post is a quick shout-out to two excellent pieces documenting the corruption of the venture capital industry:

- Molly White's Narrative over numbers: Andreessen Horowitz's State of Crypto report

- Matt Levine's Crypto Had Its Bank Runs Too

Thursday, May 11, 2023

Flooding The Zone With Shit

| Tom Cowap CC-BY-SA 4.0 |

My immediate reaction to the news of ChatGPT was to tell friends "at last, we have solved the Fermi Paradox"[1]. It wasn't that I feared being told "This mission is too important for me to allow you to jeopardize it", but rather that I assumed that civilizations across the galaxy evolved to be able to implement ChatGPT-like systems, which proceeded to irretrievably pollute their information environment, preventing any further progress.

Below the fold I explain why my on-line experience, starting from Usenet in the early 80s, leads me to believe that humanity's existential threat from these AIs comes from Steve Bannon and his ilk flooding the zone with shit[2].

Thursday, May 4, 2023

The Cryptocurrency Use Case

| Paul Le Roux By Farantgh - Own work |

One of the (many) times I have been heckled during a panel on crypto was when I argued that it shouldn’t be thought of as money. The only reason to use it other than for speculation, I said, was to buy drugs on the internet. This was a preposterous idea, the heckler retorted; crypto is used for so much more than that.

The piece ends:

So in a funny way, my heckler was right: crypto isn’t just used for speculating on and buying drugs on the internet: it’s used for much murkier criminal activities, too.

Below the fold I discuss the details of the "murkier criminal activities".

Thursday, April 27, 2023

Crypto: My Part In Its Downfall

I was asked to talk about cryptocurrencies to the 49th Asilomar Microcomputer Workshop. I decided that my talk would take the form of a chronology, so I based the title on a book by the late, great comic Spike Milligan. It became Crypto: My Part In Its Downfall[1].

Below the fold is the text, with links to the sources.

Thursday, April 20, 2023

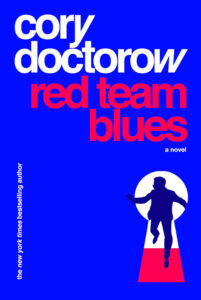

Red Team Blues

Since I started my career as a Cassandra of Cryptocurrencies I haven't had much time to read novels; I have too much fun reading about the unfolding disasters. But I did enjoy Cory Doctorow's Little Brother and Homeland, so when he asked me to review Red Team Blues I sat down and read it.

The first thing to catch my eye was the dedication to the late, great Dan Kaminsky, a fellow attendee of the Asilomar Microcomputer Workshop and someone I admired.

It is an engrossing read. The action moves swiftly, the plot requires little suspension of disbelief and has plenty of twists to keep you thinking. The interesting parts are the details about how people and their money can be tracked, and how people with a lot can prevent it being tracked. Doctorow gets to cover many of his favorite themes in a fast-moving story about a character approaching retirement. I'm well past that stage, but I understand some of those issues.

The story ends happily because all the bodies that pile up are bad guys. This is necessary since this is apparently the first in a series. I'll definitely read the next one.

You don't need to understand blockchains and cryptocurrencies to enjoy the story, but I do, so below the fold I can't resist picking some nits with the technical details.

Thursday, April 13, 2023

Compute In Storage

Tobias Mann's Los Alamos Taps Seagate To Put Compute On Spinning Rust describes progress in the concept of computational storage. I first discussed this in my 2010 JCDL keynote, based on 2009's FAWN, the Fast Array of Wimpy Nodes by David Andersen et al. from Carnegie Mellon. Their work started from the observation that moving data from storage to memory for processing by a CPU takes time and energy, and the faster you do it the more energy it takes. So the less of it you do, the better. Below the fold I start from FAWN and end up with the work under way at Los Alamos.
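The FAWN observation that moving data costs time and energy is why pushing computation into the storage device helps: if the drive filters records itself, only the matches cross the link to the host. Below is a toy Python model, not Seagate's or Los Alamos' interface; the function names and the record layout are hypothetical, and it counts bytes moved as a stand-in for time and energy.

```python
from typing import Callable, Sequence

Record = bytes
Predicate = Callable[[Record], bool]


def host_side_filter(records: Sequence[Record], pred: Predicate) -> tuple[list[Record], int]:
    """Conventional path: every record is read into host memory, then the CPU filters it."""
    bytes_moved = sum(len(r) for r in records)
    return [r for r in records if pred(r)], bytes_moved


def storage_side_filter(records: Sequence[Record], pred: Predicate) -> tuple[list[Record], int]:
    """Computational-storage path: the drive's processor filters, so only matches are moved."""
    matches = [r for r in records if pred(r)]
    bytes_moved = sum(len(r) for r in matches)
    return matches, bytes_moved


def wanted(r: Record) -> bool:
    """Hypothetical query: records tagged with a leading b"X"."""
    return r.startswith(b"X")


# Hypothetical workload: 1,000 fixed-size 100-byte records, 1% of which match.
records = [(b"X" if i % 100 == 0 else b"a") + b"." * 99 for i in range(1000)]

host_matches, host_bytes = host_side_filter(records, wanted)
drive_matches, drive_bytes = storage_side_filter(records, wanted)
print(host_bytes, drive_bytes)  # 100000 1000
```

Both paths return the same answer; the difference is that the storage-side path moves 1% of the data, and the more selective the query, the bigger the saving.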

Tuesday, April 4, 2023

How Bubbles Are Blown

Last October I wrote Non-Fungible Token Bubble Lasted 10 Months. The NFT market is still dead, a fossil relic of a massive wave of typical cryptocurrency pump-and-dump schemes and wash trading. But the great thing is that this all happened on a public blockchain, so data palaeontologists have an unrivalled dataset with which to unearth the inner workings of a speculative bubble.

Bryce Elder's What NFT mania can tell us about market bubbles points us to NFT Bubbles in which Andrea Barbon and Angelo Ranaldo do just that. Their abstract states:

Our study reveals that agent-level variables, such as investor sophistication, heterogeneity, and wash trading, in addition to aggregate variables, such as volatility, price acceleration, and turnover, significantly predict bubble formation and price crashes. We find that sophisticated investors consistently outperform others and exhibit characteristics consistent with superior information and skills, supporting the narrative surrounding asset pricing bubbles.

Below the fold I discuss the details.

Tuesday, March 28, 2023

Two Great Reads

This post is to flag two great posts by authors always worth reading, both related to the sad state of the venture capital industry upon which I have pontificated several times:

- Molly White's The venture capitalist's dilemma.

- Fais Khan's Zero Knowledge Influencer: Are ZKPs Worth the Hype?.

Thursday, March 16, 2023

More Cryptocurrency Gaslighting

Ignacio de Gregorio is a "crypto expert" with 8.5K followers on Medium and he's worried. In The one word that can kill Crypto is back he discusses the New York Attorney General's suit against KuCoin and, once again, demonstrates how gaslighting is central to the arguments supporting cryptocurrencies. Below the fold I point out the flaws in his argument.

Thursday, March 9, 2023

C720 Linux Update

The three Acer C720 Chromebooks I wrote about in:

- 2014's A Note of Thanks,

- 2017's Travels with a Chromebook,

- and 2021's Chromebook Linux Update

I was becoming a little concerned by the fact that the 5.0-series kernel I was stuck with was getting long in the tooth. So as an experiment I wiped C720 #3 and:

- Installed Mint 21.1 from scratch with LVM and full-disk encryption.

- Installed Mint 21.1 from scratch without full-disk encryption and with an encrypted home directory, and updated to the current 5.15.0-67 kernel.

Tuesday, March 7, 2023

On Trusting Trustlessness

Nearly five years ago some bad guys used "administrative backdoors" in a "smart contract" to steal $23.5M from Bancor. In response I wrote DINO and IINO pointing out a fundamental problem with "smart contracts" built on blockchains. The technology was sold as "trustless":

A major misconception about blockchains is that they provide a basis of trust. A better perspective is that blockchains eliminate the need for trust.

But the "smart contracts" could either be:

- immutable, implying that you are trusting the developers to write perfect code, which frequently turns out to be a mistake,

- or upgradable, implying that you are trusting those with the keys to the contract, which frequently turns out to be a mistake.

Now, in response to some good guys using an "unknown vulnerability" in a smart contract to recover $140M in coins looted in the Wormhole exploit, Molly White wrote The Oasis "counter-hack" and the centralization of defi on the same topic. Below the fold, I comment on her much better, much more detailed discussion of the implications of "smart contracts" that can be "upgraded", or rather arbitrarily changed, by their owners.
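The trust problem with upgradable contracts shows up clearly in a toy model of the proxy pattern commonly used to build them: the state stays in the proxy, while the logic it forwards calls to can be swapped by whoever holds the admin key. This is a Python simulation under stated assumptions, not Solidity or any real deployment; `Vault`, `DrainingVault` and `UpgradableProxy` are hypothetical names.

```python
from typing import Protocol


class Logic(Protocol):
    def withdraw(self, ledger: dict[str, int], caller: str, amount: int) -> int: ...


class Vault:
    """Original logic: a caller may withdraw only up to their own balance."""

    def withdraw(self, ledger: dict[str, int], caller: str, amount: int) -> int:
        if amount < 0 or ledger.get(caller, 0) < amount:
            raise ValueError("invalid amount or insufficient balance")
        ledger[caller] -= amount
        return amount


class DrainingVault:
    """Hypothetical malicious 'upgrade': any caller receives every balance."""

    def withdraw(self, ledger: dict[str, int], caller: str, amount: int) -> int:
        taken = sum(ledger.values())
        for user in ledger:
            ledger[user] = 0
        return taken


class UpgradableProxy:
    """Keeps the state (the ledger) and forwards calls to replaceable logic."""

    def __init__(self, admin: str, logic: Logic) -> None:
        self.admin = admin
        self.logic = logic
        self.ledger: dict[str, int] = {}

    def upgrade(self, caller: str, new_logic: Logic) -> None:
        # The only safeguard is who holds the admin key; users are not consulted.
        if caller != self.admin:
            raise PermissionError("only the admin may upgrade")
        self.logic = new_logic

    def withdraw(self, caller: str, amount: int) -> int:
        return self.logic.withdraw(self.ledger, caller, amount)


proxy = UpgradableProxy(admin="keyholder", logic=Vault())
proxy.ledger.update({"alice": 100, "bob": 50})
print(proxy.withdraw("alice", 30))     # 30, allowed by the original rules
proxy.upgrade("keyholder", DrainingVault())
print(proxy.withdraw("keyholder", 0))  # 120, all the remaining balances
print(proxy.ledger)                    # {'alice': 0, 'bob': 0}
```

The immutable alternative simply omits `upgrade`. That removes the keyholder, but it also means a bug in `Vault` could never be fixed: the two horns of the dilemma above.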

Tuesday, February 28, 2023

The Center For Gaslighting About Blockchains


A year later I am laughing as I read Francesca Maglione’s Princeton Says Crypto Chaos Helps Justify Its Blockchain Center describing their desperate attempts to spin this as a good move. Below the fold I pour scorn on this outbreak of "blockchain is the answer, now what was the question?".

Tuesday, February 14, 2023

Sybil Defense


- It ignores the fact that decentralization isn't binary, it is a spectrum. Systems claiming decentralization can be characterized by their "Nakamoto coefficient":

The number of entities sufficient to disrupt a blockchain is relatively low: four for Bitcoin, two for Ethereum, and less than a dozen for most PoS networks.

This number varies through time, but for both it is almost always between two and five, which is not very "decentralized". Given that the "entities" in question are known to coordinate their behavior off-chain, this number doesn't tell you anything useful about the system.

- What calling a system "decentralized" even though it actually isn't does usefully do is to inhibit regulation. It creates the false impression that responsibility for the state and actions of the system is so diffuse that regulators lack a viable target.
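The Nakamoto coefficient is usually computed as the smallest number of entities whose combined share of hash power or stake exceeds the threshold needed to disrupt the system. A minimal Python sketch, assuming a 50% threshold (the usual one for majority attacks on Proof-of-Work); one-third is often used instead for BFT-style Proof-of-Stake protocols. The pool names and percentages are made up for illustration, not measurements of any real chain.

```python
from typing import Mapping


def nakamoto_coefficient(shares: Mapping[str, float], threshold: float = 0.5) -> int:
    """Smallest number of entities whose combined share exceeds `threshold`.

    `shares` maps each entity (a mining pool, a validator operator, ...) to its
    hash power or stake in any unit; the running total is compared against
    `threshold` times the overall total, so shares need not sum to 1.
    """
    if not 0 < threshold < 1:
        raise ValueError("threshold must be between 0 and 1")
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("shares must have a positive total")
    cumulative = 0.0
    # Taking the largest entities first gives the minimum count.
    for count, share in enumerate(sorted(shares.values(), reverse=True), start=1):
        cumulative += share
        if cumulative > threshold * total:
            return count
    return len(shares)  # only reached through floating-point rounding


# Hypothetical hash-power distribution, in percent.
pools = {"A": 30, "B": 19, "C": 15, "D": 12, "E": 10, "F": 9, "G": 5}
print(nakamoto_coefficient(pools))                 # 3 entities exceed 50%
print(nakamoto_coefficient(pools, threshold=1/3))  # 2 entities exceed one third
```

If entities coordinate off-chain, they should be counted as one, which can only lower the result: merging pools A and B in this example drops it from 3 to 2.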

There is a much more useful, completely objective criterion. Participation in a system either is, or is not subject to permission from some authority, and this can be confirmed by the experiment of trying to participate without asking permission.

Permissionless systems can claim some advantages, but they suffer from some serious disadvantages. Chief among them is the need to defend against "Sybil attacks". Below the fold I discuss Sybil attacks, the defense against them, and the implications for the systems that adopt this defense.

Tuesday, February 7, 2023

Economic Incentives

Economic incentives are the glue holding the cryptosphere together. The security of Proof-of-Work blockchains depends upon the cost in hardware and power of an attack being more than the attack could gain. The security of Proof-of-Stake blockchains depends upon an attack reducing the value of the stake. There are economic incentives for market manipulation, pump-and-dump schemes, rug pulls, front-running and many other market behaviors. These are all very effective, but in this post I look at what appears to be a glaring exception to their effectiveness.
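The two security conditions above reduce to simple inequalities: an attack is deterred only if what it costs the attacker exceeds what it could gain. A back-of-the-envelope Python sketch; the cost models (buying hardware plus electricity for Proof-of-Work, the fall in value of the attacker's own stake for Proof-of-Stake) are simplifying assumptions, and every number in the example is hypothetical.

```python
def pow_attack_cost(hardware_usd: float, power_kw: float, hours: float, usd_per_kwh: float) -> float:
    """Assumed Proof-of-Work cost: buying the hardware plus the electricity it burns."""
    return hardware_usd + power_kw * hours * usd_per_kwh


def pos_attack_cost(stake_usd: float, value_drop: float) -> float:
    """Assumed Proof-of-Stake cost: the fraction of the attacker's stake value that is lost."""
    return stake_usd * value_drop


def deterred(attack_cost: float, attack_gain: float) -> bool:
    """The attack is uneconomic only if it costs more than it could gain."""
    return attack_cost > attack_gain


gain = 5_000_000  # hypothetical proceeds of, say, a double-spend

pow_cost = pow_attack_cost(hardware_usd=1_000_000, power_kw=2_000, hours=24, usd_per_kwh=0.05)
pos_cost = pos_attack_cost(stake_usd=10_000_000, value_drop=0.25)
print(pow_cost, deterred(pow_cost, gain))  # 1002400.0 False
print(pos_cost, deterred(pos_cost, gain))  # 2500000.0 False
```

If the attacker can rent the hardware, or resell it afterwards, its effective cost is lower than this sketch assumes, so the Proof-of-Work figure here overstates the cost of attack.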

Permissionless systems are less efficient, slower, and vastly more expensive to set up and operate than permissioned systems performing exactly the same task. One would think that the permissioned systems would out-compete them, but in the cryptosphere they don't. Below the fold I attempt to answer the following obvious questions:

- Why are permissionless systems more expensive?

- How large is the investment in avoiding the need for permission?

- Where does the return on this investment come from?

- How large is the return on this investment?

the common meaning of ‘decentralized’ as applied to blockchain systems functions as a veil that covers over and prevents many from seeing the actions of key actors within the system.

Thursday, January 26, 2023

Regulatory Capture In Action

On January 20th, SEC Commissioner Hester Peirce gave a long speech at Duke University entitled Outdated: Remarks before the Digital Assets at Duke Conference, essentially arguing against doing her job by regulating cryptocurrencies.

Below the fold I point out how she is shilling for the cryptosphere, with a long list of excuses for inaction.

Tuesday, January 10, 2023

Binance's Time In The Barrel

The bulk of last month's Dominoes was about Binance, the dominant unregulated cryptocurrency exchange, and the risk that in the wake of FTX's collapse it might be the next victim of cryptocurrency contagion. Just as happened with FTX, once the media picked up on reports of problems, further stories came thick and fast. So below the fold are updates on two of the problems facing Binance.

Thursday, January 5, 2023

Matt Levine's "The Crypto Story": Postscript
