The abstract was:

The Internet is suffering an epidemic of supply chain attacks, in which a trusted supplier of content is compromised and delivers malware to some or all of their clients. The recent SolarWinds compromise is just one glaring example. This talk reviews efforts to defend digital supply chains.Below the fold, the text of the talk with links to the sources.

SolarWinds, and many other recent system and network compromises have been supply chain attacks. These are extremely efficient, because unlike one-at-a-time attacks such as phishing, they provide a built-in mass deployment mechanism. A single compromise of SolarWinds infected at least 18,000 networks. Clearly, the vendors' security practices, and their vendors' security practices, and so on ad infinitum are important, but the sad truth is that current digital supply chain technologies are incapable of mitigating the inevitable security lapses along the chain.

This talk reviews the efforts to defend supply chains that deliver digital content, such as software. But lets start with a simpler case, web pages.

Web Page Supply Chain

How do I know that I'm talking to the right Web site? Because there's a closed padlock icon in the URL bar, right?[Slide 1]

Mozilla says:

A green padlock (with or without an organization name) indicates that:NB - this is misleading!

- You are definitely connected to the website whose address is shown in the address bar; the connection has not been intercepted.

- The connection between Firefox and the website is encrypted to prevent eavesdropping.

The padlock icon appears when the browser has validated that the connection to the URL in the URL bar supplied a certificate for the site in question carrying a signature chain ending in one of the root certificates the browser trusts. Browsers come with a default list of root certificates from Certificate Authorities (CAs). My Firefox trusts 140 certificates from 71 different organizations including, for example, Amazon and Google, but also Chunghwa Telecom Co., Ltd. and the Dutch government. Why is this list a problem?

- The browser trusts all of them equally.

- The browser trusts CAs that the CAs on the list delegate trust to. Back in 2010, the EFF found more than 650 organizations that Internet Explorer and Firefox trusted.

- Commercial CAs on the list, and CAs they delegate to, have regularly been found to be issuing false or insecure certificates.

One of these trusted organizations is the Internet Security Research Group, a not-for-profit organization hosted by the Linux Foundation and sponsored by many organizations including Mozilla and the EFF, which has greatly improved the information hygiene of the Web through a program called Let's Encrypt. This has provided over 225 million Web sites with free certificates carrying a signature chain rooted in a certificate that almost all browsers trust. My blog's certificate is one of them, as you can see by clicking on the padlock icon.

[Slide 3]

Barysevich identified four such sellers of counterfeit certificates since 2011. Two of them remain in business today. The sellers offered a variety of options. In 2014, one provider calling himself C@T advertised certificates that used a Microsoft technology known as Authenticode for signing executable files and programming scripts that can install software. C@T offered code-signing certificates for macOS apps as well. ... "In his advertisement, C@T explained that the certificates are registered under legitimate corporations and issued by Comodo, Thawte, and Symantec—the largest and most respected issuers,"

Dan Goodin One-stop counterfeit certificate shops for all your malware-signing needs

Abuse of the trust users place in CAs is routine:

In one case, a prominent Dutch CA (DigiNotar) was compromised and the hackers were able to use the CA’s system to issue fake SSL certificates. The certificates were used to impersonate numerous sites in Iran, such as Gmail and Facebook, which enabled the operators of the fake sites to spy on unsuspecting site users. ... More recently, a large U.S.-based CA (TrustWave) admitted that it issued subordinate root certificates to one of its customers so the customer could monitor traffic on their internal network. Subordinate root certificates can be used to create SSL certificates for nearly any domain on the Internet. Although Trustwave has revoked the certificate and stated that it will no longer issue subordinate root certificates to customers, it illustrates just how easy it is for CAs to make missteps and just how severe the consequences of those missteps might be.In 2018 Sennheiser provided another example:

The issue with the two HeadSetup apps came to light earlier this year when German cyber-security firm Secorvo found that versions 7.3, 7.4, and 8.0 installed two root Certification Authority (CA) certificates into the Windows Trusted Root Certificate Store of users' computers but also included the private keys for all in the SennComCCKey.pem file.Certificates depend on public-key cryptography, which splits keys into public/private key pairs. Private keys can decrypt text encrypted by the public key, and vice versa. The security of the system depends upon private keys being kept secret. This poses two problems:

- As the Sennheiser example shows, it is easy for the private keys to leak. Another common way for them to leak is for a server to be compromised. For the server to be able to verify its identity, and thus unlock the padlock, the private key needs to be stored on the server in cleartext. So an intruder can steal it to impersonate the server.

- There is no alarm bell or notification to the owner or affected users when a private key leaks. So, as in the Sennheiser case, the attacker may be able to use it unimpeded for a long time, until some security researcher notices some anomaly.

In a report published today, Secorvo researchers published proof-of-concept code showing how trivial would be for an attacker to analyze the installers for both apps and extract the private keys.Cimpanu also reports on a more recent case:

Making matters worse, the certificates are also installed for Mac users, via HeadSetup macOS app versions, and they aren't removed from the operating system's Trusted Root Certificate Store during current HeadSetup updates or uninstall operations.

...

Sennheiser's snafu ... is not the first of its kind. In 2015, Lenovo shipped laptops with a certificate that exposed its private key in a scandal that became known as Superfish. Dell did the exact same thing in 2016 in a similarly bad security incident that became known as eDellRoot.

Under the guise of a "cybersecurity exercise," the Kazakhstan government is forcing citizens in its capital of Nur-Sultan (formerly Astana) to install a digital certificate on their devices if they want to access foreign internet services.This type of “mistake” allows attackers to impersonate any Web site to affected devices.

Once installed, the certificate would allow the government to intercept all HTTPS traffic made from users' devices via a technique called MitM (Man-in-the-Middle).

CAs are supposed to issue three grades of certificate based on increasingly rigorous validation:

- Domain Validated (DV) certificates verify control over the DNS entries, email and Web content of the specified domain. They can be issued via automated processes, as with Let's Encrypt.

- Organization Validated (OV) certificates are supposed to verify the legal entity behind the DV-level control of the domain, but in practice are treated the same as DV certificates.

- Extended Validation (EV) certificates require "verification of the requesting entity's identity by a certificate authority (CA)". Verification is supposed to be an intrusive, human process.

But, as can be seen from the advert, the Extended Verification process is far from fool-proof. This lack of trustworthiness of CAs should not be a surprise. Six years ago Security Collapse in the HTTPS Market, a fascinating analysis of the (lack of) security on the Web from an economic rather than a technical perspective by Axel Arnbak et al from Amsterdam and Delft Universities showed that CAs lack incentives to be trustworthy. They write:

The reason for the weakest-link is:

- Information asymmetry prevents buyers from knowing what CAs are really doing. Buyers are paying for the perception of security, a liability shield, and trust signals to third parties. None of these correlates verifiably with actual security. Given that CA security is largely unobservable, buyers’ demands for security do not necessarily translate into strong security incentives for CAs.

- Negative externalities of the weakest-link security of the system exacerbate these incentive problems. The failure of a single CA impacts the whole ecosystem, not just that CA’s customers. All other things being equal, these interdependencies undermine the incentives of CAs to invest, as the security of their customers depends on the efforts of all other CAs.

A crucial technical property of the HTTPS authentication model is that any CA can sign certificates for any domain name. In other words, literally anyone can request a certificate for a Google domain at any CA anywhere in the world, even when Google itself has contracted one particular CA to sign its certificate.This "technical property" is actually important, it is what enables a competitive market of CAs. Symantec in particular has exploited it wholesale:

Google's investigation revealed that over a span of years, Symantec CAs have improperly issued more than 30,000 certificates. ... They are a major violation of the so-called baseline requirements that major browser makers impose of CAs as a condition of being trusted by major browsers.But Symantec has suffered no effective sanctions because they are too big to fail:

Symantec's repeated violations underscore one of the problems Google and others have in enforcing terms of the baseline requirements. When violations are carried out by issuers with a big enough market share they're considered too big to fail. If Google were to nullify all of the Symantec-issued certificates overnight, it might cause widespread outages.My Firefox still trusts Symantec root certificates. Because Google, Mozilla and others prioritize keeping the Web working over keeping it secure, deleting misbehaving big CAs from trust lists won't happen. When Mozilla writes:

You are definitely connected to the website whose address is shown in the address bar; the connection has not been intercepted.they are assuming a world of honest CAs that isn't this world. If you have the locked padlock icon in your URL bar, you are probably talking to the right Web site, but there is a chance you aren't.

[Slide 5]

Recent data from anti-phishing company PhishLabs shows that 49 percent of all phishing sites in the third quarter of 2018 bore the padlock security icon next to the phishing site domain name as displayed in a browser address bar. That’s up from 25 percent just one year ago, and from 35 percent in the second quarter of 2018.

Brian Krebs Half of all Phishing Sites Now Have the Padlock

Building on earlier work by Wendlandt et al, Moxie Marlinspike, the EFF and others, in 2012 Google started work on an approach specified in RFC6962, and called Certificate Transparency (CT). The big difference from earlier efforts, which didn't require cooperation from website owners and CAs, was that Google's did require cooperation and they had enough leverage to obtain it:

[Slide 6]

Google's Certificate Transparency project fixes several structural flaws in the SSL certificate system, which is the main cryptographic system that underlies all HTTPS connections. These flaws weaken the reliability and effectiveness of encrypted Internet connections and can compromise critical TLS/SSL mechanisms, including domain validation, end-to-end encryption, and the chains of trust set up by certificate authorities. If left unchecked, these flaws can facilitate a wide range of security attacks, such as website spoofing, server impersonation, and man-in-the-middle attacks.

Certificate Transparency helps eliminate these flaws by providing an open framework for monitoring and auditing SSL certificates in nearly real time. Specifically, Certificate Transparency makes it possible to detect SSL certificates that have been mistakenly issued by a certificate authority or maliciously acquired from an otherwise unimpeachable certificate authority. It also makes it possible to identify certificate authorities that have gone rogue and are maliciously issuing certificates.

Certificate Transparency helps eliminate these flaws by providing an open framework for monitoring and auditing SSL certificates in nearly real time. Specifically, Certificate Transparency makes it possible to detect SSL certificates that have been mistakenly issued by a certificate authority or maliciously acquired from an otherwise unimpeachable certificate authority. It also makes it possible to identify certificate authorities that have gone rogue and are maliciously issuing certificates.

Certificate Transparency

The basic idea is to accompany the certificate with a hash of the certificate signed by a trusted third party, attesting that the certificate holder told the third party that the certificate with that hash was current. Thus in order to spoof a service, an attacker would have to both obtain a fraudulent certificate from a CA, and somehow persuade the third party to sign a statement that the service had told them the fraudulent certificate was current. Clearly this is:

- more secure than the current situation, which requires only compromising a CA, and:

- more effective than client-only approaches, which can detect that a certificate has changed but not whether the change was authorized.

In order to improve the security of Extended Validation (EV) certificates, Google Chrome requires Certificate Transparency (CT) compliance for all EV certificates issued after 1 Jan 2015.Clients now need two lists of trusted third parties, the CAs and the sources of CT attestations. The need for these trusted third parties is where the blockchain enthusiasts would jump in and claim (falsely) that using a blockchain would eliminate the need for trust. But CT has a much more sophisticated approach, Ronald Reagan's "Trust, but Verify". In the real world it isn't feasible to solve the problem of untrustworthy CAs by eliminating the need for trust. CT's approach instead is to provide a mechanism by which breaches of trust, both by the CAs and by the attestors, can be rapidly and unambiguously detected.

[Slide 7]

Here is a brief overview of how CT works to detect breaches of trust. The system has the following components:

- Logs, to which CAs report their current certificates, and from which they obtain attestations, called Signed Certificate Timestamps (SCTs), that owners can attach to their certificates. Clients can verify the signature on the SCT, then verify that the hash it contains matches the certificate. If it does, the certificate was the one that the CA reported to the log, and the owner validated. It is envisaged that there will be tens but not thousands of logs; Chrome currently trusts 46 logs operated by 11 organizations. Each log maintains a Merkle tree data structure of the certificates for which it has issued SCTs.

- Monitors, which periodically download all newly added entries from the logs that they monitor, verify that they have in fact been added to the log, and perform a series of validity checks on them. They also thus act as backups for the logs they monitor.

- Auditors, which use the Merkle tree of the logs they audit to verify that certificates have been correctly appended to the log, and that no retroactive insertions, deletions or modifications of the certificates in the log have taken place. Clients can use auditors to determine whether a certificate appears in a log. If it doesn't, they can use the SCT to prove that the log misbehaved.

[Slide 8]

Certificate Transparency Architecture:

- The certificate data is held by multiple independent services.

- They get the data directly from the source, not via replication from other services.

- Clients access the data from a random selection of the services.

- There is an audit process continually monitoring the services looking for inconsistencies.

These are all also features of the protocol underlying the LOCKSS digital preservation system, published in 2003. In both cases, the random choice among a population of independent services makes life hard for attackers. If they are to avoid detection, they must compromise the majority of the services, and provide correct information to auditors while providing false information to victims.

Looking at the list of logs Chrome currently trusts, it is clear that almost all are operated by CAs themselves. Assuming that each monitor at each CA is monitoring some of the other logs as well as the one it operates, this does not represent a threat, because misbehavior by that CA would be detected by other CAs. A CA's monitor that was tempted to cover up misbehavior by a different CA's log it was monitoring would risk being "named and shamed" by some other CA monitoring the same log, just as the misbehaving CA would be "named and shamed".

It is important to observe that, despite the fact that CAs operate the majority of the CT infrastructure, its effectiveness in disciplining CAs is not impaired. All three major CAs have suffered reputational damage from recent security failures, although because they are "too big to fail" this hasn't impacted their business much. However, as whales in a large school of minnows it is in their interest to impose costs (for implementing CT) and penalties (for security lapses) on the minnows. Note that Google was sufficiently annoyed with Symantec's persistent lack of security that it set up its own CA. The threat that their business could be taken away by the tech oligopoly is real, and cooperating with Google may have been the least bad choice. Because these major corporations have an incentive to pay for the CT infrastructure, it is sustainable in a way that a market of separate businesses, or a permissionless blockchain supported by speculation in a cryptocurrency would not be.

Fundamentally, if applications such as CT attempt to provide absolute security they are doomed to fail, and their failures will be abrupt and complete. It is more important to provide the highest level of security compatible with resilience, so that the inevitable failures are contained and manageable. This is one of the reasons why permissionless blockchains, subject to 51% attacks, and permissioned blockchains, with a single, central point of failure, are not suitable.

Software Supply Chain

[Slide 9]

When the mass compromise came to light last month, Microsoft said the hackers also stole signing certificates that allowed them to impersonate any of a target’s existing users and accounts through the Security Assertion Markup Language. Typically abbreviated as SAML, the XML-based language provides a way for identity providers to exchange authentication and authorization data with service providers.

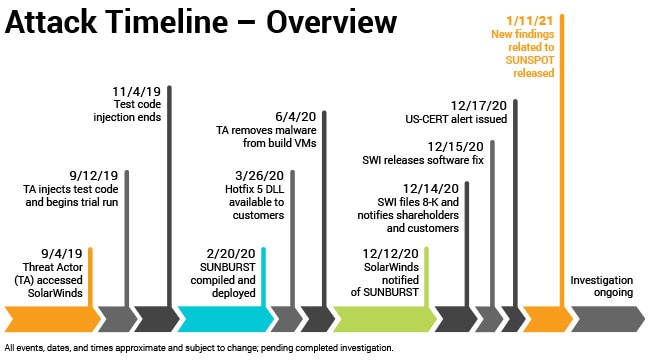

The full impact of the recent compromise of SolarWind's Orion network management software will likely never be known, It affected at least 18,000 networks, including Microsoft's and:

the Treasury Department, the State Department, the Commerce Department, the Energy Department and parts of the PentagonIt was not detected by any of the US government's network monitoring systems, but by FireEye, a computer security company that was also a victim. But for a mistake by the attackers at FireEye it would still be undetected. It was an extremely sophisticated attack, which has rightfully gained a lot of attention.

To understand how defenses against attacks like this might work, it is first necessary to understand how the supply chain that installs and updates the software on your computer works. I'll use apt, the system used by Debian Linux and its derivatives, as the example.

A system running Debian or another APT-based Linux distribution runs software it received in "packages" that contain the software files, and metadata that includes dependencies. Their hashes can be verified against those in a release file, signed by the distribution publisher. Packages come in two forms, source and compiled. The source of a package is signed by the official package maintainer and submitted to the distribution publisher. The publisher verifies the signature and builds the source to form the compiled package, whose hashes are then included in the release file.

The signature on the source package verifies that the package maintainer approves this combination of files for the distributor to build. The signature on the release file verifies that the distributor built the corresponding set of packages from approved sources and that the combination is approved for users to install.

[Slide 10]

There are thus two possible points of entry for an attacker:

- They could compromise the developer, so that the signed source code files received by the distributor contained malware (type A),

- Or they could compromise the distributor, so that the package whose hash was in the signed release file did not reflect the signed source code, but contained malware (type B).

An example of a type A attack occurred in November 2018. Dan Goodin reported that:

The malicious code was inserted in two stages into event-stream, a code library with 2 million downloads that's used by Fortune 500 companies and small startups alike. In stage one, version 3.3.6, published on September 8, included a benign module known as flatmap-stream. Stage two was implemented on October 5 when flatmap-stream was updated to include malicious code that attempted to steal bitcoin wallets and transfer their balances to a server located in Kuala Lumpur.How were the attackers able to do this? Goodin explains:

According to the Github discussion that exposed the backdoor, the longtime event-stream developer no longer had time to provide updates. So several months ago, he accepted the help of an unknown developer. The new developer took care to keep the backdoor from being discovered. Besides being gradually implemented in stages, it also narrowly targeted only the Copay wallet app. The malicious code was also hard to spot because the flatmap-stream module was encrypted.All that was needed to implement this type A attack was e-mail and github accounts, and some social engineering.

Dan Goodin describes a simple Type B attack in New supply chain attack uses poisoned updates to infect gamers’ computers:

In a nutshell, the attack works this way: on launch, Nox.exe sends a request to a programming interface to query update information. The BigNox API server responds with update information that includes a URL where the legitimate update is supposed to be available. Eset speculates that the legitimate update may have been replaced with malware or, alternatively, a new filename or URL was introduced.[Slide 11]

Malware is then installed on the target’s machine. The malicious files aren’t digitally signed the way legitimate updates are. That suggests the BigNox software build system isn’t compromised; only the systems for delivering updates are. The malware performs limited reconnaissance on the targeted computer. The attackers further tailor the malicious updates to specific targets of interest.

The SolarWinds attackers tried but failed to penetrate the network of Crowdstrike, another computer security company. SUNSPOT: An Implant in the Build Process, Crowdstrike's analysis of the attack, reveals the much greater sophistication of this Type B attack. Once implanted in SolarWinds' build system:

- SUNSPOT runs once a second scanning for instances of MsBuild.exe, the tool used to build the target software.

- If SUNSPOT finds an MsBuild.exe, it next locates the directory in which the build is running.

- Then SUNSPOT checks whether what is being built is the target software.

- If it is, SUNSPOT checks whether the target source file has changed.

- If it hasn't, SUNSPOT carefully substitutes the modified source file for the target source file.

- SUNSPOT waits until the build completes, then carefully restores the target source file and erases the traces of its work.

Microsoft's analysis reveals a lot more sophistication of the attacker's operations once they had penetrated the network:

[Slide 12]

Each Cobalt Strike DLL implant was prepared to be unique per machine and avoided at any cost overlap and reuse of folder name, file name, export function names, C2 domain/IP, HTTP requests, timestamp, file metadata, config, and child process launched. This extreme level of variance was also applied to non-executable entities, such as WMI persistence filter name, WMI filter query, passwords used for 7-zip archives, and names of output log files.

How could software supply chains be enhanced to resist these attacks? In an important paper entitled Software Distribution Transparency and Auditability, Benjamin Hof and Georg Carle from TU Munich:

- Describe how APT works to maintain up-to-date software on clients by distributing signed packages.

- Review previous efforts to improve the security of this process.

- Propose to enhance APT's security by layering a system similar to Certificate Transparency (CT) on top.

- Detail the operation of their systems' logs, auditors and monitors, which are similar to CT's in principle but different in detail.

- Describe and measure the performance of an implementation of their layer on top of APT using the Trillian software underlying some CT implementations.

As regards the SolarWinds attack, there are two important "missing pieces" in their system, and all the predecessors. Each is the subject of a separate effort:

[Slide 13]

- Reproducible Builds.

- Bootstrappable Compilers.

Suppose SolarWinds had been working in Hof and Carle's system. They would have signed their source code, built it, and signed the resulting binaries. The attackers would have arranged that the source that was built was not the source that SolarWinds signed, but SolarWinds would not have known that. So the signatures on both the unmodified source and the modified binaries would appear valid in the logs, but the binaries would be malign.

The problem is that the connection between the source and the binaries rests on an assumption that the distributor's build environment has not been compromised - i.e. no type B attack. As with the multiple logs of CT, what is needed is multiple independent builds of the signed source. Unless all of the independent build environments are compromised, a compromised build will differ from the others because it contains malware.

This is a great idea, but in practice it is very hard to achieve for both technical and organizational reasons:

- The first technical reason is that in general, building the same source twice results in different binaries. Compiler and linker output typically contains timestamps, temporary file names, and other sources of randomness. The build system needs to be reproducible.

- The second technical reason is that, in order to be reproducible, the multiple independent builds have to use the same build environment. So each of the independent build environments will have the same vulnerabilities, allowing for the possibility that the attacker could compromise them all.

- The organizational reason is that truly independent builds can only be done in an open source environment in which anyone, and in particular each of the independent builders, can access the source code.

- Alice, a package developer who is blackmailed to distribute binaries that don't match the public source (a Type A attack).

- Bob, a build farm sysadmin whose personal computer has been compromised, leading to a compromised build toolchain in the build farm that inserts backdoors into the binaries (a Type B attack).

- Carol, a free software enthusiast who distributes binaries to friends. An evil maid attack has compromised her laptop.

[Slide 14]

Way back in 1974, Paul Karger and Roger Schell discovered a devastating attack against computer systems. Ken Thompson described it in his classic 1984 speech, "Reflections on Trusting Trust." Basically, an attacker changes a compiler binary to produce malicious versions of some programs, INCLUDING ITSELF. Once this is done, the attack perpetuates, essentially undetectably. Thompson demonstrated the attack in a devastating way: he subverted a compiler of an experimental victim, allowing Thompson to log in as root without using a password. The victim never noticed the attack, even when they disassembled the binaries -- the compiler rigged the disassembler, too.

In 2006, Bruce Schneier summarized the message of perhaps the most famous of ACM's annual Turing Award lectures. In this attack, the compromised build environment inserts malware even though it is building the unmodified source code. Unlike the SolarWinds attack, the signatures testifying that the binaries are the output of building the signed source code are correct.

[Slide 15]

This is the motivation for the Bootstrappable Builds project, whose goal is to create a process for building a complete toolchain starting from a "seed" binary that is simple enough to be certified "by inspection". Recently, they achieved a major milestone. Starting from a tiny "seed" binary, they were able to create a working TinyCC compiler for the ARM architecture. Starting from TinyCC, it is possible to build the entire GnuCC toolchain and thus, in principle, a working Linux. There is clearly a long way still to go to a bootstrapped full toolchain proof against Type B attacks.

The event-stream attack can be thought of as the organization-level analog of a Sybil attack on a peer-to-peer system. Creating an e-mail identity is almost free. The defense against Sybil attacks is to make maintaining and using an identity in the system expensive. As with proof-of-work in Bitcoin, the idea is that the white hats will spend more (compute more useless hashes) than the black hats. Even this has limits. Eric Budish's analysis shows that, if the potential gain from an attack on a blockchain is to be outweighed by its cost, the value of transactions in a block must be less than the block reward.

Would a similar defense against "Sybil" type A attacks on the software supply chain be possible? There are a number of issues:

- The potential gains from such attacks are large, both because they can compromise very large numbers of systems quickly (event-stream had 2M downloads), and because the banking credentials, cryptocurrency wallets, and other data these systems contain can quickly be converted into large amounts of cash.

- Thus the penalty for mounting an attack would have to be an even larger amount of cash. Package maintainers would need to be bonded or insured for large sums, which implies that distributions and package libraries would need organizational structures capable of enforcing these requirements.

- Bonding and insurance would be expensive for package maintainers, who are mostly unpaid volunteers. There would have to be a way of paying them for their efforts, at least enough to cover the costs of bonding and insurance.

- Thus users of the packages would need to pay for their use, which means the packages could neither be free, nor open source.

- Which would make implementing the reproducible builds and bootstrapped compilers needed to defend against type B attacks extremely difficult.

It turns out that this talk is timely. Two days ago, Eric Brewer, Rob Pike et al from Google posted Know, Prevent, Fix: A framework for shifting the discussion around vulnerabilities in open source, an important and detailed look at the problem of vulnerabilities in open source and what can be done to reduce them. Their summary is:

It is common for a program to depend, directly or indirectly, on thousands of packages and libraries. For example, Kubernetes now depends on about 1,000 packages. Open source likely makes more use of dependencies than closed source, and from a wider range of suppliers; the number of distinct entities that need to be trusted can be very high. This makes it extremely difficult to understand how open source is used in products and what vulnerabilities might be relevant. There is also no assurance that what is built matches the source code.The bulk of their post addresses improvements to the quality of the development process, with three goals:

Taking a step back, although supply-chain attacks are a risk, the vast majority of vulnerabilities are mundane and unintentional—honest errors made by well-intentioned developers. Furthermore, bad actors are more likely to exploit known vulnerabilities than to find their own: it’s just easier. As such, we must focus on making fundamental changes to address the majority of vulnerabilities, as doing so will move the entire industry far along in addressing the complex cases as well, including supply-chain attacks.

- Know about the vulnerabilities in your software

- Prevent the addition of new vulnerabilities, and

- Fix or remove vulnerabilities.

This is a big task, and currently unrealistic for the majority of open source. Part of the beauty of open source is its lack of constraints on the process, which encourages a wide range of contributors. However, that flexibility can hinder security considerations. We want contributors, but we cannot expect everyone to be equally focused on security. Instead, we must identify critical packages and protect them. Such critical packages must be held to a range of higher development standards, even though that might add developer friction.[Slide 16]

- Define Criteria for “Critical” Open Source Projects that Merit Higher Standards

-

No Unilateral Changes to Critical Software

- Require Code Review for Critical Software

- Changes to Critical Software Require Approval by Two Independent Parties

-

Authentication for Participants in Critical Software

- For Critical Software, Owners and Maintainers Cannot be Anonymous

- Strong Authentication for Contributors of Critical Software

- A Federated Model for Identities

- Notification for Changes in Risk

- Transparency for Artifacts

- Trust the Build Process

Their goals for the "higher development standards" include identifying the important packages that require higher standards, implementing review and signoff of changes by at least two independent developers, "transparency for artifacts", by which they mean reproducible builds, and "trust the build process" which implies a bootstrappable toolchain.

They acknowledge that these are very aggressive goals, because in many ways they cut against the free-wheeling development culture of open source that has sparked its remarkable productivity. If Google were to persuade other major corporations to put significant additional resources of money and manpower into implementing them they would likely succeed. Absent this, the additional load on developers will likely cause resistance.

Ooops! While preparing this talk I completely forgot about Dan Geer et al's important Counting Broken Links: A Quant’s View of Software Supply Chain Security. It is a must-read attempt to quantify the incidence of, and classify the types of, software supply chain attacks. They conclude:

ReplyDelete"First, there is a striking absence of data collection and analysis that would help identify and assess risks associated with these attacks, especially those involving open source software.

...

Second, existing application security products are unable to identify the distinctive characteristics of software supply chain attacks.

...

Third, reducing the software supply chain attack surface also requires adopting existing technologies and processes that provide the information needed to verify the origin and content of source code and binaries, eliminating or mitigating many of the risks of compromise."

Dan Goodin reports on a brilliantly simple supply chain attack in New type of supply-chain attack hit Apple, Microsoft and 33 other companies:

ReplyDelete"The technique was unveiled last Tuesday by security researcher Alex Birsan. His so-called dependency confusion or namespace confusion attack starts by placing malicious code in an official public repository such as NPM, PyPI, or RubyGems. By giving the submissions the same package name as dependencies used by companies such as Apple, Microsoft, Tesla, and 33 other companies, Birsan was able to get these companies to automatically download and install the counterfeit code."

Simon Sharwood reports that Microsoft admits some Azure, Exchange, Intune source code snaffled in SolarWinds schemozzle:

ReplyDelete"Microsoft has admitted that as a result of installing backdoored SolarWinds tools in some parts of its corporate network, portions of its source code was obtained and exfiltrated by parties unknown."

Bruce Schneier's must-read op-ed Why Was SolarWinds So Vulnerable to a Hack? makes an important point:

ReplyDelete"The modern market economy, which aggressively rewards corporations for short-term profits and aggressive cost-cutting, is also part of the problem: Its incentive structure all but ensures that successful tech companies will end up selling unsecure products and services.

Like all for-profit corporations, SolarWinds aims to increase shareholder value by minimizing costs and maximizing profit. The company is owned in large part by Silver Lake and Thoma Bravo, private-equity firms known for extreme cost-cutting.

SolarWinds certainly seems to have underspent on security. The company outsourced much of its software engineering to cheaper programmers overseas, even though that typically increases the risk of security vulnerabilities. For a while, in 2019, the update server’s password for SolarWinds’s network management software was reported to be “solarwinds123.”

...

As the economics writer Matt Stoller has suggested, cybersecurity is a natural area for a technology company to cut costs because its customers won’t notice unless they are hacked — and if they are, they will have already paid for the product. In other words, the risk of a cyberattack can be transferred to the customers."

Former SolarWinds CEO blames intern for 'solarwinds123' password leak by Brian Fung and Geneva Sands reports on SolarWinds' desperate spinning:

ReplyDelete"Current and former top executives at SolarWinds are blaming a company intern for a critical lapse in password security that apparently went undiagnosed for years.

The password in question, "solarwinds123," was discovered in 2019 on the public internet by an independent security researcher who warned the company that the leak had exposed a SolarWinds file server.

...

Neither Thompson nor Ramakrishna explained to lawmakers why the company's technology allowed for such passwords in the first place.

Ramakrishna later testified that the password had been in use as early as 2017.

...

Emails between Kumar and SolarWinds showed that the leaked password allowed Kumar to log in and successfully deposit files on the company's server. Using that tactic, Kumar warned the company, any hacker could upload malicious programs to SolarWinds."

Now that's a high-value target! Dan Goodin reports that Backdoored password manager stole data from as many as 29K enterprises:

ReplyDelete"As many as 29,000 users of the Passwordstate password manager downloaded a malicious update that extracted data from the app and sent it to an attacker-controlled server, the app maker told customers.

In an email, Passwordstate creator Click Studios told customers that bad actors compromised its upgrade mechanism and used it to install a malicious file on user computers. The file, named “moserware.secretsplitter.dll,” contained a legitimate copy of an app called SecretSplitter, along with malicious code named "Loader," according to a brief writeup from security firm CSIS Group.

...

The Passwordstate breach underscores the risk posed by password managers because they represent a single point of failure that can lead to the compromise of large numbers of online assets. The risks are significantly lower when two-factor authentication is available and enabled because extracted passwords alone aren’t enough to gain unauthorized access."

The effectiveness of supply chain attacks is aptly illustrated by Dan Goodin's Up to 1,500 businesses infected in one of the worst ransomware attacks ever:

ReplyDelete"As many as 1,500 businesses around the world have been infected by highly destructive malware that first struck software maker Kaseya. In one of the worst ransom attacks ever, the malware, in turn, used that access to fell Kaseya’s customers.

The attack struck on Friday afternoon in the lead-up to the three-day Independence Day holiday weekend in the US. Hackers affiliated with REvil, one of ransomware’s most cutthroat gangs, exploited a zero-day vulnerability in the Kaseya VSA remote management service, which the company says is used by 35,000 customers. The REvil affiliates then used their control of Kaseya’s infrastructure to push a malicious software update to customers, who are primarily small-to-midsize businesses."

Jody Godoy's SolarWinds investors allege board knew about cyber risks reports that:

ReplyDelete"Led by a Missouri pension fund, the investors allege that the board failed to implement procedures to monitor cybersecurity risks, such as requiring the company's management to report on those risks regularly.

They are seeking damages on behalf of the company and to reform the company's policies on cybersecurity oversight."

Tm Starks reports that SEC: SolarWinds failed to disclose cybersecurity woes before historic breach:

ReplyDelete"In a complaint filed in the Southern District of New York, the SEC contends that SolarWinds and the company’s chief information security officer, Tim Brown, repeatedly violated the antifraud disclosure and internal controls provisions of federal securities law by not disclosing vulnerabilities that it knew could lead to a hack.

Later, SolarWinds suffered a breach of its network monitoring software, Orion, that allowed suspected Russian government-connected hackers to infiltrate thousands of customer organizations that included nine federal agencies.

...

“Dating back to at least October 2018, when SolarWinds conducted its [initial public offering] continuing through at least December 2020, SolarWinds and/or Brown made materially false and misleading statements and omissions related to SolarWinds securities risks and practices in at least three types of public disclosures,” the SEC complaint says."

Matt Levine's take on the SEC's suit against SolarWinds is here:

ReplyDelete"Everything is securities fraud, and using “password” as your password is securities fraud, but using “password” as your password is especially securities fraud if one of your engineers sends an email saying that it is “pretty well amateur hour.” But it is pretty well amateur hour! It is helpful for someone to point that out. But it gets you sued."