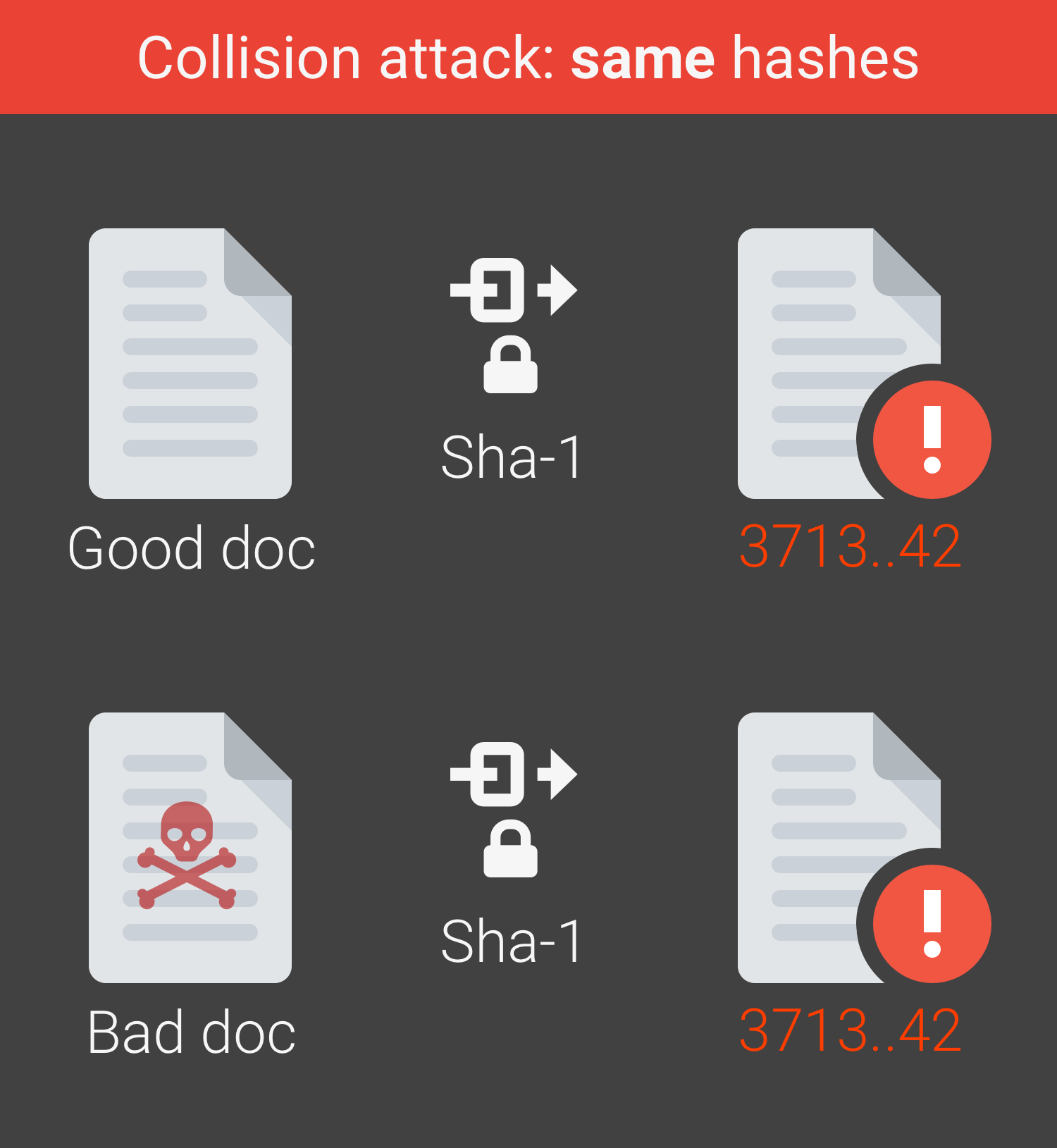

There is an accessible report on this paper by Dan Goodin at Ars Technica. These collisions have already caused trouble for systems in the field, for example for WebKit's Subversion repository. Subversion and other systems use SHA-1 to deduplicate content; files with the same SHA-1 are assumed to be identical. Below the fold, I look at the implications for digital preservation.

SHA-1 Collision (source)

Conducted using Amazon's "spot pricing" of otherwise idle machines, the team's attack would cost about $110K. This attack is less powerful than a chosen-prefix attack, in which a second colliding file is created for an arbitrary first file. 2012's Flame malware used a chosen-prefix attack on MD5 to hijack the Windows update mechanism.

As we have been saying for more than a decade, the design of digital preservation systems must start from a threat model. Clearly, there are few digital preservation systems whose threat model currently includes external or internal evil-doers willing to spend $110K on an identical-prefix attack. Such an attack would, in any case, require persuading the preservation system to ingest a file of the attacker's devising with the appropriate prefix.

But the attack, and the inevitability of better, cheaper techniques leading to chosen-prefix attacks in the future, illustrate the risks involved in systems that use stored hashes to verify integrity. These systems are vulnerable to chosen-prefix attacks, because they allow content to be changed without causing hash mismatches.

The LOCKSS technology is an exception. LOCKSS boxes do not depend on stored hashes and are thus not vulnerable to identical-prefix or even chosen-prefix attacks on their content. The system does store hashes (currently SHA-1), but uses them only as hints to raise the priority of polls on content if the hashes don't match. The polling system is currently configured to use SHA-1, but each time it prepends different random nonces to the content that is hashed, mitigating these attacks.

If an attacker replaced a file in a LOCKSS box with a SHA-1 colliding file, the next poll would not be given higher priority because the hashes would match. But when the poll took place, the random nonces would ensure that the SHA-1 computed would not be the collision hash. The damage would be detected and repaired. The hashes computed during each poll are not stored; they are of no value after the time for the poll expires. For details of the polling mechanism, see our 2003 SOSP paper.
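The effect of the nonce can be illustrated with a minimal sketch. This is not the LOCKSS implementation; `nonced_sha1`, `run_poll`, the box names, and the simple majority rule are all hypothetical simplifications. The idea it shows is that a collision computed in advance for the un-nonced content does not survive when fresh random bytes are hashed before the content, so a substituted copy disagrees with the good copies at poll time.

```python
import hashlib
import os
from collections import Counter
from typing import Dict, Optional


def nonced_sha1(nonce: bytes, content: bytes) -> str:
    """SHA-1 over nonce || content; the nonce is hashed before any content."""
    h = hashlib.sha1()
    h.update(nonce)
    h.update(content)
    return h.hexdigest()


def run_poll(copies: Dict[str, bytes], nonce: Optional[bytes] = None) -> Dict[str, bool]:
    """Hypothetical poll: every box hashes its copy with the same fresh nonce.

    Returns, per box, whether its hash agrees with the most common hash.
    Nothing is stored; the hashes are discarded when the poll ends.
    """
    if nonce is None:
        nonce = os.urandom(20)  # fresh random nonce, used for this poll only
    votes = {box: nonced_sha1(nonce, data) for box, data in copies.items()}
    winning_hash, _ = Counter(votes.values()).most_common(1)[0]
    return {box: digest == winning_hash for box, digest in votes.items()}


if __name__ == "__main__":
    copies = {"box1": b"original", "box2": b"original", "box3": b"tampered"}
    print(run_poll(copies))  # {'box1': True, 'box2': True, 'box3': False}
```

Because the nonce differs from poll to poll, the same content yields a different hash each time, which is why there is nothing useful to store or to attack in advance.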

There are a number of problems with stored hashes as an integrity preservation technique. In Rick Whitt on Digital Preservation I wrote:

> The long timescale of digital preservation poses another problem for digital signatures; they fade over time. Like other integrity check mechanisms, the signature attests not to the digital object, but to the hash of the digital object. The goal of hash algorithm design is to make it extremely difficult with foreseeable technology to create a different digital object with the same hash, a collision. They cannot be designed to make this impossible, merely very difficult. So, as technology advances with time, it becomes easier and easier for an attacker to substitute a different object without invalidating the signature. Because over time hash algorithms become vulnerable and obsolete, preservation systems depending for integrity on preserving digital signatures, or even just hashes, must routinely re-sign, or re-hash, with a more up-to-date algorithm.

When should preservation systems re-hash their content? The obvious answer is "before anyone can create collisions", which raises the question of how the preservation system can know the capabilities of the attackers identified by the system's threat model. Archives whose threat model leaves out nation-state adversaries are probably safe if they re-hash and re-sign as soon as the open literature shows progress toward a partial break, as it did for SHA-1 in 2005.

The use of stored hashes for integrity checking has another, related problem. There are two possible results from re-computing the hash of the content and comparing it with the stored hash:

- The two hashes match, in which case either:

  - The hash and the content are unchanged, or

  - An attacker has changed both the content and the hash, or

  - An attacker has replaced the content with a collision, leaving the hash unchanged.

- The two hashes differ, in which case:

  - The content has changed and the hash has not, or

  - The hash has changed and the content has not, or

  - Both content and hash have changed.

Traditionally, this problem has been attacked by the use of Merkle trees, trees in which each parent node contains the hash of its child nodes. Notice that this technique does not remove the need to detect change in a string of bits, but by hashing hashes it can reduce the size of the bit string.

The possibility that the hash algorithm used for the Merkle tree could become vulnerable to collisions is again problematic. If a bogus sub-tree could be synthesized that had the same hash at its root as a victim sub-tree, the entire content of the sub-tree could be replaced undetectably.
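A minimal sketch of the Merkle tree construction may make this concrete. It rests on several assumptions that are not from the text above: SHA-256 as the hash, a binary tree, an unpaired node being promoted unchanged to the next level, and one-byte prefixes to keep leaf hashes distinct from interior hashes. The function name `merkle_root` is hypothetical.

```python
import hashlib
from typing import List


def merkle_root(leaves: List[bytes]) -> bytes:
    """Root hash of a binary Merkle tree over the given leaf contents."""
    if not leaves:
        raise ValueError("need at least one leaf")
    # Leaf level: hash each piece of content (0x00 prefix marks a leaf).
    level = [hashlib.sha256(b"\x00" + leaf).digest() for leaf in leaves]
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            pair = level[i:i + 2]
            if len(pair) == 2:
                # Parent holds the hash of its two children (0x01 marks interior).
                next_level.append(hashlib.sha256(b"\x01" + pair[0] + pair[1]).digest())
            else:
                # Unpaired node is carried up unchanged (an assumed convention).
                next_level.append(pair[0])
        level = next_level
    return level[0]


if __name__ == "__main__":
    files = [b"file-a", b"file-b", b"file-c"]
    print(merkle_root(files).hex())
```

Only the 32-byte root needs to be protected against change, but the protection is only as strong as the hash: a collision anywhere in the tree leaves the root unchanged.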

One piece of advice for preservation systems using stored hashes is, when re-hashing with algorithm B because previous algorithm A has been deprecated, to both keep the A hashes and verify them during succeeding integrity checks. It is much more difficult for an attacker to create files that collide for two different algorithms. For example, using the team's colliding PDF files:

    $ sha1sum *.pdf
    38762cf7f55934b34d179ae6a4c80cadccbb7f0a  shattered-1.pdf
    38762cf7f55934b34d179ae6a4c80cadccbb7f0a  shattered-2.pdf
    $ md5sum *.pdf
    ee4aa52b139d925f8d8884402b0a750c  shattered-1.pdf
    5bd9d8cabc46041579a311230539b8d1  shattered-2.pdf

Clearly, keeping the A hashes is pointless unless they are also verified. The attacker will have ensured that the B hashes match, but will probably not have expended the vastly greater effort to ensure that the A hashes also match.
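As a rough sketch of what verifying both hashes might look like, the following computes every stored algorithm's digest in a single pass over the content and accepts the content only if all of them match. The names `digests` and `verify` are hypothetical, and the choice of SHA-1 as algorithm A and SHA-256 as algorithm B is an assumption for illustration.

```python
import hashlib
from typing import Dict, Iterable


def digests(chunks: Iterable[bytes], algorithms: Iterable[str]) -> Dict[str, str]:
    """Compute several digests in one pass over the content."""
    hashers = {name: hashlib.new(name) for name in algorithms}
    for chunk in chunks:
        for h in hashers.values():
            h.update(chunk)
    return {name: h.hexdigest() for name, h in hashers.items()}


def verify(chunks: Iterable[bytes], stored: Dict[str, str]) -> bool:
    """Accept the content only if every stored hash, old and new, still matches."""
    computed = digests(chunks, stored.keys())
    return all(computed[name] == stored[name] for name in stored)


if __name__ == "__main__":
    content = [b"hello ", b"world"]
    # At re-hash time: keep the old A hash (SHA-1) alongside the new B hash (SHA-256).
    stored = digests(content, ["sha1", "sha256"])
    print(verify(content, stored))            # True
    print(verify([b"hello world!"], stored))  # False
```

An attacker who substitutes content must now defeat both algorithms at once, and the extra cost to the archive is one more digest per integrity check.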

Stored hashes from an obsolete algorithm are in practice adequate to detect random bit-rot, but as we see they can remain useful even against evil-doers. Archives whose threat model does not include a significant level of evil-doing are unlikely to survive long in today's Internet.

In PGP keys, software security, and much more threatened by new SHA1 exploit, Dan Goodin reports that SHA1 is now even dead-er:

"The attack demonstrated Tuesday is the first known chosen prefix collision on SHA1. To demonstrate its potency, researchers Gaëtan Leurent and Thomas Peyrin of Inria France and the Nanyang Technological University in Singapore respectively, used the collision to perform a PGP/GnuPG impersonation attack."