| DEC 340 display |

| Virtua Fighter on NV1 |

| GeForce GTX280 |

The wheel also applies to graphics and user interface software. The early window systems, such as SunView and the Andrew window system, were libraries of fixed functions, as was the X Window System. James Gosling and I tried to move a half-turn around the wheel with NeWS, which was a user interface environment fully programmable in PostScript, but we were premature.

| news.bbc.co.uk 12/1/98 |

| Visiting the New York Times |

Kris and I organized a workshop last year to look at the problems the evolution of the Web poses for attempts to collect and preserve it. Here is the list of problem areas the workshop identified:

- Database driven features & functions

- Complex/variable URI formats & inconsistent/variable link implementations

- Dynamically generated, ever changing, URIs

- Rich Media

- Scripted, incremental display & page loading mechanisms

- Scripted, HTML forms

- Multi-sourced, embedded material

- Dynamic login/auth services: captchas, cross-site/social authentication, & user-sensitive embeds

- Alternate display based on user agent or other parameters

- Exclusions by convention

- Exclusions by design

- Server side scripts & remote procedure calls

- HTML5 "web sockets"

- Mobile publishing

| Not an article from Graft |

| An article from Graft |

When someone says HTML, you tend to think of a page like this, which looks like vanilla HTML but is actually an HTML5 geolocation demo. Most of what you actually get from Web servers now is programs, like the nearly 12KB of Javascript included by the three lines near the top. This is the shortest of the three files. A crawler collecting the page can't just scan the content to find the links to the next content it needs to request. It has to execute the program to find the links, which raises all sorts of issues.
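To make the gap concrete, here is a minimal sketch of the "just scan the content" approach, using Python's standard `html.parser`. The page, its paths, and the names `LinkScanner` and `static_links` are all made up for illustration; this is not how any particular crawler is implemented. The scanner sees the links written in the markup but has no way to see the link that only comes into existence when the inline script runs.

```python
from html.parser import HTMLParser


class LinkScanner(HTMLParser):
    """Collects href/src attribute values that appear in static markup."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def static_links(html):
    """Return the links a scan-only crawler would find in the page."""
    scanner = LinkScanner()
    scanner.feed(html)
    return scanner.links


# Hypothetical page: one static link, plus one link that exists only
# after the inline script has been executed in a browser.
PAGE = """
<html><head><script src="/js/app.js"></script></head>
<body>
<a href="/about.html">About</a>
<script>
  var a = document.createElement("a");
  a.href = "/articles/" + (40 + 2) + ".html";
  document.body.appendChild(a);
</script>
</body></html>
"""

found = static_links(PAGE)
print(found)
```

The scan finds `/js/app.js` and `/about.html`, but not the `/articles/42.html` link that the script would build at run time. Finding that one requires executing the script.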

The program may be malicious, so its execution needs to be carefully sandboxed. Even if it doesn't intend to be malicious, its execution will take an unpredictable amount of time, which can amount to a denial-of-service attack on the crawler. How many of you have encountered Web pages that froze your browser? Execution may not be slow enough to amount to an attack, but it will be a lot more expensive than simply scanning for links. Ingesting the content just got a lot more expensive in compute terms, which doesn't help the whole "economic sustainability is job #1" issue.

It is easy to say "execute the content". But the execution of the content depends on the inputs the program obtains, in this case the geolocation of the crawler and the weather there at the time of the crawl. In general these inputs depend on, for example, the set of cookies and the contents of HTML5's local storage in the "browser" the crawler is emulating, the state of all the external databases the program may call upon, and the user's inputs. So the crawler has not merely to run the program, it has to emulate a user mousing and clicking everywhere in the page in search of behaviors that trigger new links.

But we're not just finding links for their own sake; we want to preserve those links and the content they point to for future dissemination. If we preserve the programs for future re-execution we also have to preserve some of their inputs, such as the responses to database queries, and supply those responses at the appropriate times during the re-execution of the program. Other inputs, such as mouse movements and clicks, have to be left to the future reader to supply. This is very tricky, including as it does issues such as faking secure connections.
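The idea of preserving inputs and re-supplying them can be sketched as a record-and-replay store. This is only an illustration under simplifying assumptions: the class `InputStore`, its methods, and the example URL are hypothetical, requests are matched exactly on method, URL, and body, and the hard parts mentioned above (timing, secure connections, user input) are ignored entirely.

```python
import hashlib
import json


class InputStore:
    """Hypothetical store of responses seen at crawl time, for later replay."""

    def __init__(self):
        self.recorded = {}

    @staticmethod
    def _key(method, url, body=""):
        # Identify a request by a hash of its method, URL, and body.
        raw = f"{method} {url}\n{body}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def record(self, method, url, response, body=""):
        """Called at crawl time for each external response the program got."""
        self.recorded[self._key(method, url, body)] = response

    def replay(self, method, url, body=""):
        """Called at re-execution time instead of contacting the live server."""
        key = self._key(method, url, body)
        if key not in self.recorded:
            raise LookupError(f"no preserved response for {method} {url}")
        return self.recorded[key]

    def to_json(self):
        return json.dumps(self.recorded, sort_keys=True)

    @classmethod
    def from_json(cls, text):
        store = cls()
        store.recorded = json.loads(text)
        return store


# Crawl time: the program asks a (made-up) weather service for data.
crawl = InputStore()
crawl.record("GET", "https://example.org/api/weather?lat=37&lon=-122",
             '{"temp_c": 14}')
archived = crawl.to_json()

# Much later: re-execution gets the preserved answer, not today's weather.
replayer = InputStore.from_json(archived)
response = replayer.replay("GET",
                           "https://example.org/api/weather?lat=37&lon=-122")
```

Note what the sketch cannot do: any request the program makes during re-execution that it did not happen to make during the crawl raises `LookupError`, which is exactly the fragility at issue.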

Re-executing the program in the future is a very fragile endeavour. This isn't because the Javascript virtual machine will have become obsolete. It is well-supported by an open source stack. It is because it is very difficult to be sure which are the significant inputs you need to capture, preserve, and re-supply. A trivial example is a Javascript program that displays the date. Is the correct preserved behavior to display the date when it was ingested, to preserve the original user experience? Or is it to display the date when it will be re-executed, to preserve the original functionality? There's no right answer.
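The date example fits in a few lines. In this sketch the function names and the ingest date are made up for illustration; the point is only that the same preserved program gives two defensible answers depending on which clock the replay environment hands it.

```python
from datetime import date


def render_banner(today):
    """Stand-in for the preserved program: it just displays a date."""
    return f"Today is {today.isoformat()}"


# Hypothetical date on which the crawler ingested the page.
INGEST_DATE = date(2013, 4, 1)


def replay(mode, now):
    """Re-run the preserved program under one of two preservation goals."""
    if mode == "experience":
        # Preserve what the original user saw: freeze the clock at ingest.
        return render_banner(INGEST_DATE)
    if mode == "functionality":
        # Preserve what the program does: give it the real current date.
        return render_banner(now)
    raise ValueError(f"unknown replay mode: {mode}")


future = date(2030, 1, 1)
print(replay("experience", future))     # Today is 2013-04-01
print(replay("functionality", future))  # Today is 2030-01-01
```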

Among the projects exploring the problems of preserving executable objects are:

- Olive at C-MU, which is preserving virtual machines containing the executable object but not, I believe, their inputs.

- The EU-funded Workflow 4Ever project, which is trying to encapsulate scientific workflows and adequate metadata for their later re-use into Research Objects. The metadata includes sample datasets and the corresponding results, so that correct preservation can be demonstrated. Generating the metadata for re-execution of a significant workflow is a major effort (PDF).

An alternative that preserves the user experience but not the functionality is, in effect, to push the system one more half-turn around the wheel, reducing the content to fixed-function primitives. Not to try to re-execute the program but to try to re-render the result of execution. The YouTube video of Virtua Fighter is a simple example of this kind. It may be the best that can be done to cope with the special complexities of video games.

In the re-render approach, as the crawler executed the program it would record the display, and build a map of its sensitive areas with the results of activating each of them. You can think of this as a form of pre-emptive format migration, an approach that both Jeff Rothenberg and I have argued against for a long time. As with games, it may be that this, while flawed, is the best we can do with the programs on the Web we have.
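One way to picture what the crawler would record in the re-render approach is a set of captured displays, each with a map of its sensitive areas and the capture that activating each area leads to. The sketch below is a hypothetical data structure, not a description of any existing tool; the names `Region`, `Capture`, and `activate`, the file names, and the coordinates are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """A rectangular sensitive area and the capture shown after activating it."""
    x: int
    y: int
    width: int
    height: int
    target: str

    def contains(self, px, py):
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


@dataclass
class Capture:
    """One recorded display plus the map of its sensitive areas."""
    image: str
    regions: list = field(default_factory=list)


def activate(captures, current, px, py):
    """Return the name of the capture to show after a click at (px, py)."""
    for region in captures[current].regions:
        if region.contains(px, py):
            return region.target
    return current  # a click outside every sensitive area changes nothing


# Hypothetical recording of a two-state page.
captures = {
    "home": Capture("home.png", [Region(10, 10, 100, 30, target="menu")]),
    "menu": Capture("menu.png", [Region(0, 0, 50, 20, target="home")]),
}

after_click = activate(captures, "home", 20, 20)  # lands inside the region
after_miss = activate(captures, "home", 500, 500)  # lands outside it
```

The future reader only ever sees the recorded images; anything the crawler did not think to activate is simply absent from the map, which is the sense in which this preserves the experience but not the functionality.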

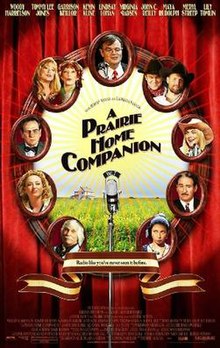

| A Prairie Home Companion |

| The Who Sell Out |

The programs that run in your browser these days also ensure that every visit to a web page is a unique experience, full of up-to-the-second personalized content. The challenge of preserving the Web is like that of preserving theatre or dance. Every performance is a unique and unrepeatable interaction between the performers, in this case a vast collection of dynamically changing databases, and the audience. Actually, it is even worse. Preserving the Web is like preserving a dance performed billions of times, each time for an audience of one, who is also the director of their individual performance.

We need to ask what we're trying to achieve by preserving Web content. We haven't managed to preserve everything so far, and we can expect to preserve even less going forward. There is a range of different goals:

- At one extreme, the Internet Archive's Wayback Machine tries to preserve samples of the whole Web. Although I was skeptical when Brewster explained what he wanted to do, I was wrong. A series of samples of the Web through time turns out to be an incredibly valuable resource. In the early days the unreliability of HTTP and the simplicity of the content meant that the sample was fairly random; the difficulties caused by the evolution of the Web mean a gradually increasing systematic bias.

- The LOCKSS Program, at the other extreme, samples in another way. It tries to preserve everything about a carefully selected set of Web pages, mostly academic journals. The sample is created by librarians selecting journals; it is a sample because we don't have the resources to preserve every academic journal even if there were an agreed definition of that term. Again, the evolution of the Web is making this job gradually more and more difficult.

Hi David,

You seem to be suggesting that some sort of emulation-based solution (in conjunction with migration-based solutions) will be necessary for preserving some types of websites.

I'm not sure if you have heard about the work the bwFLa team have been doing on Emulation as a Service (EaaS) but you might be interested.

There are details here, here, and here.

We do a great deal of work with net.art, ranging from the simple (flat hypertext) to the complex (data streams interpreted by server-side processes) to the utterly insane (MP3s called by QuickTime videos as played in ShockWave objects as invoked in JavaScript windows).

Since we deal with a very narrow focus, I can afford to spend as much time (and work as many heroics) as necessary to document and archive these WWW-based objects. Speaking coarsely, what I've found is:

- Some sites are lost causes for client-side archiving. If you only care about generally acquiring content, this can sometimes be sidestepped (e.g. PHP-driven background carousels), but maintaining functionality is impossible in these cases. It's a tradeoff you have to decide on - get something quickly (maybe) or get everything slowly (usually contacting site owners, which isn't always realistic).

- Sites that can be fully archived client-side require a considerable investment of time and expertise. Artisanally archiving a site is not unlike picking a lock: it requires mentally constructing the interior from your few tools, along with a fair amount of intuition and luck. Doing it at scale, even with industry-standard tools, is out of the question. I documented an example of a non-machine-readable site here.

The Internet Archive's standard of good-enough is about the best that can be done at scale. Combined with documentation (so that what we recall about the Web in the 00s, but wasn't captured, isn't lost), this seems to be the only reasonable way to preserve the Web at scale.

Euan, that isn't at all what I am suggesting. I can't even see what it is that you think would need to be emulated. Javascript? There is no need to emulate Javascript. Whether the current Javascript implementations survive into the future, or whether for some bizarre reason we have to run them on an emulator, has no effect whatsoever on the problems Kris and I discussed in our talks.

<rant>This obsession with Rothenberg's emulation vs. migration dichotomy really has to stop. It is making rational discussion of the problems of digital preservation impossible. Emulation and migration are proposed solutions to the problem of format obsolescence. As far as the Web is concerned, there is no problem of format obsolescence.

Has anyone out there heard of this process called "science"? The way it works is that people come up with theories about how the world behaves. Based on these theories they make predictions about the future. Then we wait to see whether these predictions come true.

If one of the competing theories makes predictions that come true in the real world, and one makes predictions that don't come true, then the one with the bad predictions is discredited and we stop using it, because using it has led us into error.

Science is really a neat idea, and we have a good example of science in the case of format obsolescence. Starting around 1995, some people had a theory of format obsolescence that, based on history from the pre-Web era, format obsolescence was imminent. Starting around 1998, I had a theory, based on the history of network protocols and open source software, that format obsolescence in the Web environment would be an extremely slow process, if it happened at all. Both sides made public predictions, in my case on this blog repeatedly since 2007 and in other forums since at least 2005.

Last fall, an experiment was published by Matt Holden of INA that showed that my prediction was correct and the prediction of imminent format obsolescence, at least for Web formats, was not correct. Matt showed that Web formats, even for high-risk audiovisual content, had not gone obsolete in more than 15 years.

In the light of this experiment, we can be confident in saying that format obsolescence is not a significant risk in preserving the Web. Arguing about migration vs. emulation in the context of Web preservation is a distraction from addressing the significant problems. In the context of the Web, the theory of imminent format obsolescence is not useful because it is wrong. Proponents of this theory need to focus on fixing their theory so that it predicts no format obsolescence on the Web in more than 15 years.

Kris' and my talks were intended to draw attention to the significant problems with current Web preservation. These are that the direction in which the Web is evolving means we are less and less able to collect Web content for preservation, and less and less able to accurately reproduce it for future access. Neither of these problems is addressed by either migration (note that my reference to migration was an analogy - I don't really think that the YouTube video of Virtua Fighter is a migration of the game) or emulation.</rant>

Alexander - I agree with most of your comment except the last paragraph. Clearly, at scale it isn't possible to do the detailed per-site work that you do, or that gets built in to the LOCKSS system's plugins for particular academic publishing platforms.

I don't know if this is what you intended, but your last paragraph implies that you think that going forward the Internet Archive and other at-scale archives preserving samples of the Web using similar technology will be able to continue doing about as good a job as they have in the past. Kris' and my talks were intended to convey the message that this isn't the case. The evolution of the Web means that the current technology is gradually losing effectiveness. Although efforts, such as the ones Kris described in her talk, are being made to develop new technologies, the best it seems they can achieve is to slow the loss in effectiveness, not to reverse it.

Hi David,

I tried to reply again here but I ran over the 4000 character limit. I've posted another reply on my blog here.

Euan's blog post in response to my rant makes it clear that I need to be more explicit.

Note that here we are discussing only Web content, not general digital content. There are two types of problem that the evolution of the Web poses for collecting and preserving Web content: collecting it and disseminating it.

The document from last year's workshop and Kris' talk were all about the problems the evolution of the Web poses for collecting the content. Unless the content can be collected it cannot in the future be disseminated. Emulation is simply irrelevant to the problems of collecting Web content. Migration is irrelevant to these problems too, in that in order to migrate content you need to be able to collect it first.

In my talk I also discussed some problems of future dissemination, mostly the fact that we have to decide whether we are preserving the functionality or the user experience of the content. This is a far deeper question than whether to use emulation or migration.

What triggered my rant was that it appeared from his first comment that Euan's thinking about digital preservation was so captured by the emulation vs. migration dichotomy that he couldn't see that, although I was discussing digital preservation, the dichotomy was irrelevant to my talk even if format obsolescence was imminent.

Yet we know that format obsolescence is not imminent. So the emulation issue was doubly irrelevant, since the problem it addresses isn't going to be significant in the foreseeable future, whereas the problems that Kris & I described are significant problems right now. The variety of approaches I commended in my conclusion refers not to doing both emulation and migration, as neither is necessary, but to a variety of approaches to collecting the content in order to mitigate the systematic sampling bias caused by almost everyone using the same crawler.

So here I am arguing about emulation vs. migration instead of about the significant problems facing the preservation of Web content right now. As my rant said "This obsession with Rothenberg's emulation vs. migration dichotomy really has to stop. It is making rational discussion of the problems of digital preservation impossible". At least in the case of Web content, I rest my case.

And, yes, I understand that much of Euan's post relates to Rothenberg Still Wrong not to this post. I'll address that part another time.

CNI has posted the video of Kris' and my talks on YouTube and Vimeo.

And I'm sorry there's been a delay in responding to Euan's comments. It's obviously going to be a long post and I have been hard up against too many deadlines to give it the attention it deserves.